COGNITIVE TASKS: MODELS OF HUMAN COGNITION AND DESIGN PRINCIPLES

Models of human cognition that have been extensively used in ergonomics to develop guidelines and design principles fall into two broad categories. Models in the first category are based on the classical paradigm of experimental psychology (also called the behavioral approach) and focus mainly on information-processing stages. Behavioral models view humans as “fallible machines” and try to determine the limitations of human cognition in a neutral fashion, independent of the context of performance, the goals and intentions of the users, and the background or history of previous actions. In contrast, more recent models of human cognition have been developed mainly through field studies and the analysis of real-world situations. Quite a few of these cognitive models have been inspired by Gibson’s (1979) work on ecological psychology, emphasizing the role of a person’s intentions, goals, and history as central determinants of human behavior.

The human factors literature is rich in behavioral and cognitive models of human performance. Because of space limitations, however, only three generic models that have found extensive application will be presented here. Section 2.1 presents a behavioral model developed by Wickens (1992), the human information-processing model. Sections 2.2 and 2.3 present two cognitive models, the action-cycle model of Norman (1988) and the skill-, rule-, and knowledge-based model of Rasmussen (1986).

The Human Information-Processing Model

The Model

The experimental psychology literature is rich in human performance models that focus on how humans perceive and process information. Wickens (1992) has summarized this literature into a generic model of information processing (Figure 1). This model draws upon a computer metaphor whereby information is perceived by appropriate sensors, held and processed in a temporary memory (i.e., the working memory, analogous to RAM), and finally acted upon through dedicated actuators. Long-term memory (corresponding to a permanent form of memory, e.g., the hard disk) can be used to store well-practiced work methods or algorithms for future use. A brief description of the human information-processing model is given below.

Information is captured by sensory systems or receptors, such as the visual, auditory, vestibular, gustatory, olfactory, tactile, and kinesthetic systems. Each sensory system is equipped with a central storage mechanism called short-term sensory store (STSS) or simply short-term memory. STSS prolongs a representation of the physical stimulus for a short period after the stimulus has terminated. It appears that environmental information is stored in the STSS even if attention is diverted elsewhere. STSS is characterized by two types of limitations: (1) the storage capacity, which is the amount of information that can be stored in the STSS, and (2) the decay, which is how long information stays in the STSS. Although there is strong experimental evidence about these limitations, there is some controversy regarding the numerical values of their limits. For example, experiments have shown that the short-term visual memory capacity varies from 7 to 17 letters and the auditory capacity from 4.4 to 6.2 letters (Card et al. 1986). With regard to memory decay rate, experiments have shown that it varies from 70 to 1000 msec for visual short-term memory, whereas for auditory short-term memory the decay varies from 900 to 3500 msec (Card et al. 1986).

In the next stage, perception, stimuli stored in STSS are processed further; that is, the perceiver becomes conscious of their presence and recognizes, identifies, or classifies them. For example, a driver first sees a red traffic light, then detects it, recognizes that it is a signal related to the driving task, and identifies it as a stop signal. A large number of different physical stimuli may be assigned to a single perceptual category. For example, the letter printed in lowercase, in uppercase, or in different typefaces (a, A) and the sound “a” all generate the same categorical perception: the letter “a.” At the same time, other characteristics or dimensions of the stimulus are also processed, such as whether the letter is spoken by a male or female voice, whether it is written in upper- or lowercase, and so on.

This example shows that stimulus perception and encoding is dependent upon available attention resources and personal goals and knowledge as stored in the long-term memory (LTM). This is the memory where perceived information and knowledge acquired through learning and training are stored permanently. As in working memory, the information in long-term memory can have any combination of auditory, spatial, and semantic characteristics. Knowledge can be either procedural (i.e., how to do things) or declarative (i.e., knowledge of facts). The goals of the person provide a workspace within which perceived stimuli and past experiences or methods retrieved from LTM are combined and processed further. This workspace is often called working memory because the person has to be conscious of and remember all presented or retrieved information. However, the capacity of working memory also seems to be limited. Miller (1956) was the first to define the capacity of working memory. In a series of experiments where participants carried out absolute judgment tasks, Miller found that the capacity of working memory varied between five and nine items or ‘‘chunks’’* of information when full attention was deployed. Cognitive processes, such as diagnosis, decision making, and planning, can operate within the same workspace or working memory space. Attention is the main regulatory mechanism for determining when control should pass from perception to cognitive processes or retrieval processes from LTM.

* The term chunk is used to define a set of adjacent stimulus units that are closely tied together by associations in a person’s long-term memory. A chunk may be a letter, a digit, a word, a phrase, a shape, or any other unit.
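
As a rough illustration of why chunking matters for a limited working memory, the sketch below groups a digit string into chunks and compares the number of items with a nominal capacity. The capacity of seven (the midpoint of Miller's five-to-nine range), the fixed chunk size, and the example number are assumptions made purely for illustration; real chunks depend on associations in a person's long-term memory, not on fixed-length grouping.

```python
def chunk(digits: str, size: int) -> list[str]:
    """Split a digit string into groups of `size` characters (last group may be shorter)."""
    return [digits[i:i + size] for i in range(0, len(digits), size)]


def fits_working_memory(items: list[str], capacity: int = 7) -> bool:
    """Return True if the number of items does not exceed the assumed capacity.

    capacity=7 is an assumption: the midpoint of the five-to-nine range
    reported by Miller (1956).
    """
    return len(items) <= capacity


number = "302106948275"            # hypothetical 12-digit part number
single_digits = chunk(number, 1)   # 12 separate items
grouped = chunk(number, 3)         # 4 chunks of three digits

print(len(single_digits), fits_working_memory(single_digits))
print(len(grouped), fits_working_memory(grouped))
```

Read digit by digit, the number exceeds the assumed capacity; presented as four groups, it does not. This is the reasoning behind casting control panel information into meaningful chunks.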

At the final stage of information processing (response execution), the responses chosen in the previous stages are executed. A response can be any kind of action, such as eye or head movements, hand or leg movements, and verbal responses. Attention resources are also required at this stage because intrinsic (e.g., kinesthetic) and/or extrinsic feedback (e.g., visual, auditory) can be used to monitor the consequences of the actions performed.

A straightforward implication of the information-processing model shown in Figure 1 is that performance can become faster and more accurate when certain mental functions become “automated” through increased practice. For instance, familiarity with machine drawings may enable maintenance technicians to focus on the cognitive aspects of the task, such as troubleshooting; less-experienced technicians would spend much more time in identifying technical components from the drawings and carrying out appropriate tests. As people acquire more experience, they are better able to time-share tasks because well-practiced aspects of the job become automated (that is, they require less attention and effort).

Practical Implications

The information-processing model has been very useful in examining the mechanisms underlying several mental functions (e.g., perception, judgment, memory, attention, and response selection) and the work factors that affect them. For instance, signal detection theory (Green and Swets 1988) describes a human detection mechanism based on the properties of response criterion and sensitivity. Work factors, such as knowledge of action results, introduction of false signals, and access to images of defectives, can increase human detection and provide a basis for ergonomic interventions. Other mental functions (e.g., perception) have also been considered in terms of several processing modes, such as serial and parallel processing, and have contributed to principles for designing man–machine interfaces (e.g., head-up displays that facilitate parallel processing of data superimposed on one another). In fact, there are several information-processing models looking at specific mental functions, all of which subscribe to the same behavioral approach.
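
The two properties named above, sensitivity and response criterion, can be estimated from an inspector's hit rate and false-alarm rate. The sketch below assumes the common equal-variance Gaussian form of signal detection theory, which the text does not spell out; the function name and the example rates are hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hit_rate: float, false_alarm_rate: float) -> tuple[float, float]:
    """Return (sensitivity d', response criterion c).

    Assumes the equal-variance Gaussian model. Both rates must lie strictly
    between 0 and 1, otherwise inv_cdf raises statistics.StatisticsError.
    """
    z = NormalDist().inv_cdf              # inverse of the standard normal CDF
    z_hit = z(hit_rate)
    z_fa = z(false_alarm_rate)
    d_prime = z_hit - z_fa                # larger value = better discrimination
    criterion = -0.5 * (z_hit + z_fa)     # positive value = conservative responding
    return d_prime, criterion


# Hypothetical inspector: detects 90% of defectives, rejects 20% of good items.
d_prime, criterion = sdt_measures(0.90, 0.20)
print(f"d' = {d_prime:.2f}, c = {criterion:.2f}")
```

Under this model, an intervention such as providing images of defectives would be expected to raise sensitivity, whereas introducing false signals mainly shifts the criterion.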

Because information-processing models have looked at the mechanisms of mental functions and the work conditions that degrade or improve them, ergonomists have often turned to them to generate guidelines for interface design, training development, and job aids design. To facilitate information processing, for instance, control panel information could be cast into meaningful chunks, thus increasing the amount of information to be processed as a single perceptual unit. For example, dials and controls can be designed and laid out on a control panel according to the following ergonomic principles (Helander 1987; McCormick and Sanders 1987):

• Frequency of use and criticality: Dials and controls that are frequently used, or are of special importance, should be placed in prominent positions, for example in the center of the control panel.

• Sequential consistency: When a particular procedure is always executed in a sequential order, controls and dials should be arranged according to this order.

• Topological consistency: Where the physical location of the controlled items is important, the layout of dials should reflect their geographical arrangement.

• Functional grouping: Dials and controls that are related to a particular function should be placed together.
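
Two of the principles above, functional grouping and frequency of use and criticality, can be combined into a simple ordering heuristic. The sketch below is only one possible reading: the `Control` fields, the rule that criticality outranks frequency, and the example controls are all assumptions, not part of the cited guidelines.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Control:
    name: str
    function: str        # functional group the control belongs to
    uses_per_shift: int  # hypothetical measure of frequency of use
    critical: bool       # hypothetical flag for special importance


def priority(control: Control) -> tuple[bool, int]:
    # Assumption: critical controls outrank frequently used ones.
    return (control.critical, control.uses_per_shift)


def layout_order(controls: list[Control]) -> list[list[str]]:
    """Group controls by function, then put the most important group and control first."""
    by_function = sorted(controls, key=lambda c: c.function)
    groups = [
        sorted(group, key=priority, reverse=True)
        for _, group in groupby(by_function, key=lambda c: c.function)
    ]
    groups.sort(key=lambda group: priority(group[0]), reverse=True)
    return [[c.name for c in group] for group in groups]


panel = [
    Control("feed pump start", "feedwater", 4, False),
    Control("emergency stop", "safety", 1, True),
    Control("feed valve", "feedwater", 20, False),
    Control("alarm reset", "safety", 6, False),
]
print(layout_order(panel))
```

The first group in the result would be the candidate for the most prominent position, such as the center of the panel. Sequential and topological consistency are not modeled here.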

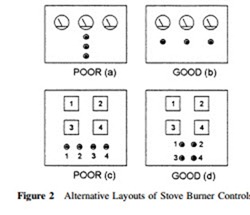

Many systems, however, fail to adhere to these principles. For example, the layout of stove burner controls fails to conform to the topological consistency principle (see Figure 2). Controls located beside their respective burners (Figure 2[b] and 2[d]) are compatible and will eliminate the confusion caused by the arrangements shown in Figure 2(a) and 2(c).

Information-processing models have also found many applications in the measurement of mental workload. The limited-resource model of human attention (Allport 1980) has been extensively used to examine the degree to which different tasks may rely upon similar psychological resources or mental functions. Presumably, the higher the reliance upon similar mental functions, the higher the mental workload experienced by the human operator. Measures of workload, hence, have tended to rely upon the degree of sharing similar mental functions and their limited capacities.

Information-processing models, however, seem insufficient to account for human behavior in many cognitive tasks where knowledge and strategy play an important role. Recent studies in tactical decision making (Serfaty et al. 1998), for instance, have shown that experienced operators are able to maintain their mental workload at low levels, even when work demands increase, because they can change their strategies and their use of mental functions. Under time pressure, for instance, crews may change from an explicit mode of communication to an implicit mode whereby information is made available without previous requests; the crew leader may keep all members informed of the “big picture” so that they can volunteer information when necessary without excessive communications. Performance of experienced personnel may become adapted to the demands of the situation, thus overcoming high workload imposed by previous methods of work and communication. This interaction of expert knowledge and strategy in using mental functions has been better explored by two other models of human cognition that are described below.

The Action-Cycle Model

The Model

Many artifacts seem rather difficult to use, often leading to frustration and human error. Norman (1988) was particularly interested in how equipment design could benefit from models of human performance. He developed the action-cycle model (see Figure 3), which examines how people set themselves goals and achieve them by acting upon the external world. This action–perception cycle entails two main cognitive processes by which people implement goals (i.e., execution process) and make further adjustments on the basis of perceived changes and evaluations of goals and intentions (i.e., evaluation process).

The starting point of any action is some notion of what is wanted, that is, the goal to be achieved. In many real tasks, this goal may be imprecisely specified with regard to actions that would lead to the desired goal, such as, “I want to write a letter.” To lead to actions, human goals must be transformed into specific statements of what is to be done. These statements are called intentions. For example, I may decide to write the letter by using a pencil or a computer or by dictating it to my secretary. To satisfy intentions, a detailed sequence of actions must be thought of (i.e., planning) and executed by manipulating several objects in the world. On the other hand, the evaluation side of things has three stages: first, perceiving what happened in the world; second, making sense of it in terms of needs and intentions (i.e., interpretation); and finally, comparing what happened with what was wanted (i.e., evaluation). The action-cycle model consists of seven action stages: one for goals (forming the goal), three for execution (forming the intention, specifying actions, executing actions), and three for evaluation (perceiving the state of the world, interpreting the state of the world, evaluating the outcome).

As Norman (1988, p. 48) points out, the action-cycle model is an approximate model rather than a complete psychological theory. The seven stages are almost certainly not discrete entities. Most behavior does not require going through all stages in sequence, and most tasks are not carried out by single actions. There may be numerous sequences, and the whole task may last hours or even days. There is a continual feedback loop, in which the results of one action cycle are used to direct further ones, goals lead to subgoals, and intentions lead to subintentions. There are action cycles in which goals are forgotten, discarded, or reformulated.

The Gulfs of Execution and Evaluation

The action-cycle model can help us understand many difficulties and errors in using artifacts. Difficulties in use are related to the distance (or amount of mental work) between intentions and possible physical actions, or between observed states of the artifact and interpretations. In other words, problems arise either because the mappings between intended actions and equipment mechanisms are insufficiently understood, or because action feedback is rather poor. There are several gulfs that separate the mental representations of the person from the physical states of the environment (Norman 1988).

The gulf of execution reflects the difference between intentions and allowable actions. The more the system allows a person to do the intended actions directly, without any extra mental effort, the smaller the gulf of execution is. A small gulf of execution ensures high usability of equipment. Consider, for example, faucets for cold and hot water. The user intention is to control two things or parameters: the water temperature and the volume. Consequently, users should be able to do that with two controls, one for each parameter. This would ensure a good mapping between intentions and allowable actions. In conventional settings, however, one faucet controls the volume of cold water and the other the volume of hot water. To obtain water of a desired volume and temperature, users must try several combinations of faucet adjustments, hence losing valuable time and water. This is an example of bad mapping between intentions and allowable actions (a large gulf of execution).
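
The faucet example can be made concrete by writing out the conversion that a two-faucet design leaves to the user. The sketch below assumes ideal mixing of two streams with equal density and specific heat; the supply temperatures (15 and 60 degrees Celsius), the units, and the function names are illustrative assumptions.

```python
def faucet_settings(total_flow: float, target_temp: float,
                    cold_temp: float = 15.0, hot_temp: float = 60.0) -> tuple[float, float]:
    """Convert the user's intention (volume, temperature) into (hot_flow, cold_flow).

    Assumes ideal mixing; supply temperatures are hypothetical defaults.
    """
    if not cold_temp <= target_temp <= hot_temp:
        raise ValueError("target temperature must lie between the supply temperatures")
    hot_flow = total_flow * (target_temp - cold_temp) / (hot_temp - cold_temp)
    return hot_flow, total_flow - hot_flow


def mixed_output(hot_flow: float, cold_flow: float,
                 cold_temp: float = 15.0, hot_temp: float = 60.0) -> tuple[float, float]:
    """Return the (total_flow, temperature) that result from two faucet settings."""
    total = hot_flow + cold_flow
    temperature = (hot_flow * hot_temp + cold_flow * cold_temp) / total
    return total, temperature


# Intention: 9 liters per minute at 40 degrees Celsius.
hot, cold = faucet_settings(9.0, 40.0)
print(hot, cold, mixed_output(hot, cold))
```

With conventional faucets, the user must in effect perform the computation in `faucet_settings` by trial and error. A design with one control for temperature and one for volume moves that computation into the device, which narrows the gulf of execution.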

The gulf of evaluation reflects the amount of effort that users must exert to interpret the physical state of the artifact and determine how well their intentions have been met. The gulf of evaluation is small when the artifact provides information about its state that is easy to get and easy to interpret and matches the way the user thinks of the artifact.

Using the Action-Cycle Model in Design

According to the action-cycle model, the usability of an artifact can be increased when its design bridges the gulfs of execution and evaluation. The seven-stage structure of the model can be cast as a list of questions to consider when designing artifacts (Norman 1988). Specifically, the designer may ask how easily the user of the artifact can:

1. Determine the function of the artifact (i.e., setting a goal)

2. Perceive what actions are possible (i.e., forming intentions)

3. Determine what physical actions would satisfy intentions (i.e., planning)

4. Perform the physical actions by manipulating the controls (i.e., executing)

5. Perceive what state the artifact is in (i.e., perceiving state)

6. Achieve his or her intentions and expectations (i.e., interpreting states)

7. Tell whether the artifact is in a desired state or intentions should be changed (i.e., evaluating intentions and goals)

A successful application of the action-cycle model in the domain of human–computer interaction concerns direct manipulation interfaces (Shneiderman 1983; Hutchins et al. 1986). These interfaces bridge the gulfs of execution and evaluation by incorporating the following properties (Shneiderman 1982, p. 251):

• Visual representation of the objects of interest

• Direct manipulation of objects, instead of commands with complex syntax

• Incremental operations whose effects are immediately visible and, on most occasions, reversible

The action-cycle model has been translated into a new design philosophy that views design as knowledge in the world. Norman (1988) argues that the knowledge required to do a job can be distributed partly in the head and partly in the world. For instance, the physical properties of objects may constrain the order in which parts can be put together, moved, picked up, or otherwise manipulated. Apart from these physical constraints, there are also cultural constraints, which rely upon accepted cultural conventions. For instance, turning a part clockwise is the culturally defined standard for attaching a part to another, while counterclockwise movement usually results in dismantling a part. Because of these physical and cultural constraints, the number of alternatives for any particular situation is reduced, as is the amount of knowledge required within human memory. Knowledge in the world, in terms of constraints and labels, explains why many assembly tasks can be performed very precisely even when technicians cannot recall the sequence they followed in dismantling equipment; physical and cultural constraints reduce the alternative ways in which parts can be assembled. Therefore, cognitive tasks are more easily done when part of the required knowledge is available externally, either explicit in the world (i.e., labels) or readily derived through constraints.
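
How strongly constraints prune the alternatives can be shown by counting assembly sequences. In the sketch below, the four parts and the precedence constraints between them are hypothetical; the code enumerates every ordering and keeps those that respect the constraints.

```python
from itertools import permutations


def valid_sequences(parts: list[str],
                    must_precede: list[tuple[str, str]]) -> list[tuple[str, ...]]:
    """Return every ordering of `parts` in which each (a, b) pair has a before b."""
    result = []
    for order in permutations(parts):
        position = {part: index for index, part in enumerate(order)}
        if all(position[a] < position[b] for a, b in must_precede):
            result.append(order)
    return result


# Hypothetical assembly: each part physically blocks the one listed after it.
parts = ["base", "gasket", "cover", "bolts"]
physical_constraints = [("base", "gasket"), ("gasket", "cover"), ("cover", "bolts")]

print(len(valid_sequences(parts, [])))                    # orderings to remember unaided
print(len(valid_sequences(parts, physical_constraints)))  # orderings the parts allow
```

Without constraints, the technician would have to recall one sequence out of all possible orderings; with them, the parts themselves leave very few options, so little needs to be held in memory.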

The Skill-, Rule-, and Knowledge-Based Model

The Model

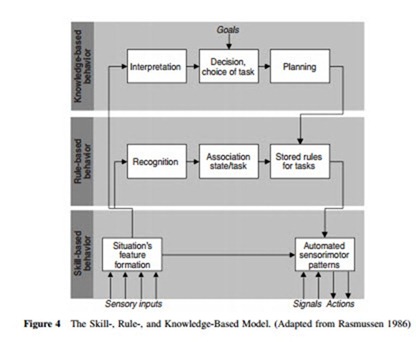

In a study of troubleshooting tasks in real work situations, Rasmussen (1983) observed that people control their interaction with the environment in different modes. The interaction depends on a proper match between the features of the work domain and the requirements of control modes. According to the skill-, rule-, and knowledge-based model (SRK model), control and performance of human activities seem to be a function of a hierarchically organized control system (Rasmussen et al. 1994). Cognitive control operates at three levels: skill-based, or automatic control; rule-based, or conditional control; and knowledge-based, or compensatory control (see Figure 4).

At the lowest level, skill-based behavior, human performance is governed by patterns of preprogrammed behaviors represented as analog structures in a time–space domain in human memory. This mode of behavior is characteristic of well-practiced and routine situations whereby open-loop or feedforward control makes performance faster. Skill-based behavior is the result of extensive practice where people develop a repertoire of cue–response patterns suited to specific situations. When a familiar situation is recognized, a response is activated, tailored, and applied to the situation. Neither conscious analysis of the situation nor deliberation over alternative solutions is required.

At the middle level, rule-based behavior, human performance is governed by conditional rules of the if-then type, in which a recognized condition triggers an associated action.

Initially, stored rules are formulated at a general level. They subsequently are supplemented by further details from the work environment. Behavior at this level requires conscious preparation: first, recognition of the need for action, followed by retrieval of past rules or methods, and finally composition of new rules through either self-motivation or instruction. Rule-based behavior is slower and more cognitively demanding than skill-based behavior. Rule-based behavior can be compared to the function of an expert system where situations are matched to a database of production rules and responses are produced by retrieving or combining different rules. People may combine rules into macrorules by collapsing conditions and responses into a single unit. With increasing practice, these macrorules may become temporal–spatial patterns requiring less conscious attention; this illustrates the transition from rule-based to skill-based behavior.
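
The expert-system analogy can be sketched as a small set of production rules matched against the current situation. Everything specific in the sketch below (the plant variables, thresholds, and actions) is hypothetical and serves only to show the match-and-retrieve mechanism.

```python
from typing import Callable, Optional

# A production rule: (label, condition on the observed state, action to take).
Rule = tuple[str, Callable[[dict], bool], str]

# Hypothetical rule base for a process operator.
RULES: list[Rule] = [
    ("high pressure", lambda s: s["pressure_bar"] > 10, "open relief valve"),
    ("low level", lambda s: s["level_pct"] < 20, "start feed pump"),
]


def select_action(state: dict, rules: list[Rule] = RULES) -> Optional[str]:
    """Return the action of the first rule whose condition matches the state."""
    for _label, condition, action in rules:
        if condition(state):
            return action
    # No stored rule applies: the situation would call for knowledge-based reasoning.
    return None


print(select_action({"pressure_bar": 12, "level_pct": 50}))
print(select_action({"pressure_bar": 5, "level_pct": 50}))
```

A macrorule in this picture would be a single entry whose condition and action collapse several of these entries into one unit.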

At the highest level, knowledge-based behavior, performance is governed by a thorough analysis of the situation and a systematic comparison of alternative means for action. Goals are explicitly formulated and alternative plans are compared rationally to maximize efficiency and minimize risks. Alternatives are considered and tested either physically, by trial and error, or conceptually, by means of thought experiments. The way that the internal structure of the work system is represented by the user is extremely important for performance. Knowledge-based behavior is slower and more cognitively demanding than rule-based behavior because it requires access to an internal or mental model of the system as well as laborious comparisons of work methods to find the best one.

Knowledge-based behavior is characteristic of unfamiliar situations. As expertise evolves, a shift to lower levels of behavior occurs. This does not mean, however, that experienced operators always work at the skill-based level. Depending on the novelty of the situation, experts may move to higher levels of behavior when uncertain about a decision. The shift to the appropriate level of behavior is another characteristic of expertise and graceful performance in complex work environments.

Using the SRK Model

The SRK model has been used by Reason (1990) to provide a framework for assigning errors to several categories related to the three levels of behavior. Deviations from current intentions due to execution failures and/or storage failures, for instance, are errors related to skill-based behavior (slips and lapses). Misclassifications of the situation, leading to the application of the wrong rule or incorrect procedure, are errors occurring at the level of rule-based behavior. Finally, errors due to limitations in cognitive resources (“bounded rationality”) and incomplete or incorrect knowledge are characteristic of knowledge-based behavior. More subtle forms of errors may occur when experienced operators fail to shift to higher levels of behavior. In these cases, operators continue skill- or rule-based behavior although the situation calls for analytical comparison of options and reassessment of the situation (i.e., knowledge-based processing).
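
The assignment of error causes to the three levels can be written down as a lookup table and used to summarize an incident log. The cause labels below paraphrase the categories in the preceding paragraph; the table layout, the "unclassified" fallback, and the example log are assumptions for illustration.

```python
from collections import Counter

# Error cause -> level of behavior, following the categories described above.
ERROR_LEVEL = {
    "execution failure": "skill-based",               # slips
    "storage failure": "skill-based",                 # lapses
    "situation misclassification": "rule-based",      # wrong rule or procedure applied
    "bounded rationality": "knowledge-based",
    "incomplete or incorrect knowledge": "knowledge-based",
}


def tally_by_level(causes: list[str]) -> Counter:
    """Count incident causes per SRK level; unknown causes are kept as 'unclassified'."""
    return Counter(ERROR_LEVEL.get(cause, "unclassified") for cause in causes)


# Hypothetical incident log.
incident_causes = [
    "execution failure",
    "situation misclassification",
    "storage failure",
    "incomplete or incorrect knowledge",
]
summary = tally_by_level(incident_causes)
print(summary)
```

A summary of this kind could indicate whether interventions should target the interface (skill-based errors), procedures (rule-based errors), or training and decision support (knowledge-based errors). The failure to shift levels described above is not captured by a simple lookup.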

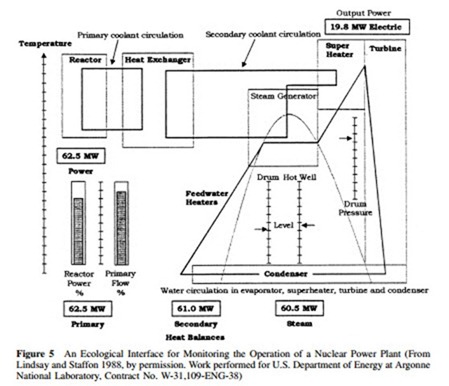

The SRK model also provides a useful framework for designing man–machine interfaces for complex systems. Vicente and Rasmussen (1992) advance the concept of ecological interfaces that exploit the powerful human capabilities of perception and action, at the same time providing appropriate support for more effortful and error-prone cognitive processes. Ecological interfaces aim at presenting information “in such a way as not to force cognitive control to a higher level than the demands of the task require, while at the same time providing the appropriate support for all three levels” (Vicente and Rasmussen 1992, p. 598). In order to achieve these goals, interfaces should obey the following three principles:

1. Support skill-based behavior: The operator should be able to act directly on the display, while the structure of presented information should be isomorphic to the part–whole structure of eye and hand movements.

2. Support rule-based behavior: The interface should provide a consistent one-to-one mapping between constraints of the work domain and cues or signs presented on the interface.

3. Support knowledge-based behavior: The interface should represent the work domain in the form of an abstraction hierarchy* to serve as an externalized mental model that supports problem solving.

* Abstraction hierarchy is a framework proposed by Rasmussen (1985; Rasmussen et al. 1994) that is useful for representing the cognitive constraints of a complex work environment. For example, the constraints related to process control have been found to belong to five hierarchically ordered levels (Vicente and Rasmussen 1992, p. 592): the purpose for which the system was designed (functional purpose); the intended causal structure of the process in terms of mass, energy, information, or value flows (abstract function); the basic functions that the plant is designed to achieve (generalized function); the characteristics of the components and the connections between them (physical function); and the appearance and spatial location of these components (physical form).

An example of an ecological interface that supports direct perception of higher-level functional properties is shown in Figure 5. This interface, designed by Lindsay and Staffon (1988), is used for monitoring the operation of a nuclear power plant. Graphical patterns show the temperature profiles of the coolant in the primary and secondary circulation systems as well as the profiles of the feedwater in the steam generator, superheater, turbine, and condenser. Note that while the display is based on primary sensor data, the functional status of the system can be directly read from the interrelationships among data. The Rankine cycle display presents the constraints of the feedwater system in a graphic visualization format so that workers can use it in guiding their actions; in doing this, workers are able to rely on their perceptual processes rather than analytical processes (e.g., by having to solve a differential equation or engage in any other abstract manipulation). Plant transients and sensor failures can be shown as distortions of the rectangles representing the primary and secondary circulation, or as temperature points of the feedwater system outside the Rankine cycle. The display can be perceived, at the discretion of the observer, at the level of the physical implications of the temperature readings, of the state of the coolant circuits, and of the flow of the energy.
