PROBABILITY THEORY: MOMENTS

MOMENTS

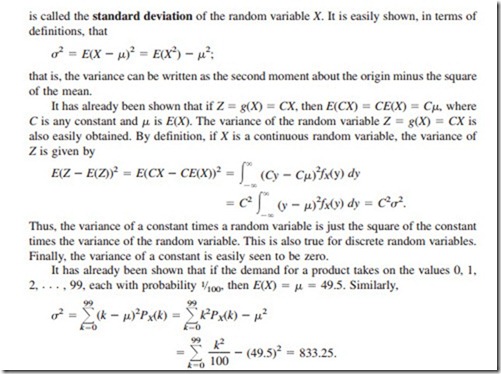

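If the function $g$ described in the preceding section is given by $Z = g(X) = X^j$, where $j$ is a positive integer, then the expectation of $X^j$ is called the $j$th moment about the origin of the random variable $X$ and is given by

$$
E(X^j) =
\begin{cases}
\sum_{x} x^j \, P\{X = x\}, & \text{if } X \text{ is discrete,} \\
\int_{-\infty}^{\infty} x^j f_X(x) \, dx, & \text{if } X \text{ is continuous,}
\end{cases}
$$

where $f_X$ denotes the density function of $X$.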
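As a small illustration of the definition, the following sketch computes moments about the origin for a discrete random variable. The helper name `raw_moment` and the fair-die example are assumptions chosen for illustration; they do not come from the text.

```python
from fractions import Fraction


def raw_moment(pmf, j):
    """Return E(X^j) for a discrete random variable.

    pmf maps each possible value x to its probability P{X = x}.
    """
    return sum((x ** j) * p for x, p in pmf.items())


# Hypothetical example: a fair six-sided die, using exact fractions.
die = {x: Fraction(1, 6) for x in range(1, 7)}

first_moment = raw_moment(die, 1)   # the mean
second_moment = raw_moment(die, 2)  # the second moment about the origin

print(first_moment, second_moment)
```

Exact fractions are used here only so that the results are not blurred by floating-point rounding; ordinary floats would work equally well.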

¹ The name for this theorem is motivated by the fact that a statistician often uses its conclusions without consciously worrying about whether the theorem is true.

Table 24.1 gives the means and variances of the random variables that are often useful in operations research. Note that for some random variables a single moment, the mean, provides a complete characterization of the distribution, e.g., the Poisson random variable. For some random variables the mean and variance provide a complete characterization of the distribution, e.g., the normal. In fact, if all the moments of a probability distribution are known, this is usually equivalent to specifying the entire distribution.
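The Poisson case can be checked numerically. The sketch below assumes a Poisson random variable with parameter `lam` (a name chosen here, not taken from the text) and approximates its mean and variance by truncating the infinite sums; both come out close to `lam`, which is why the mean alone pins down the distribution. The variance is computed as the second moment about the origin minus the square of the mean.

```python
import math


def poisson_pmf(k, lam):
    """P{X = k} for a Poisson random variable with parameter lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def poisson_mean_and_variance(lam, terms=100):
    """Approximate the mean and variance by summing the first `terms` values of k.

    The truncation point is an assumption; it is adequate when lam is
    small relative to `terms`.
    """
    ks = range(terms)
    mean = sum(k * poisson_pmf(k, lam) for k in ks)
    second_moment = sum(k * k * poisson_pmf(k, lam) for k in ks)
    return mean, second_moment - mean ** 2


mean, variance = poisson_mean_and_variance(4.0)
print(mean, variance)
```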

It was seen that the mean and variance may be sufficient to completely characterize a distribution, e.g., the normal. However, what can be said, in general, about a random variable whose mean $\mu$ and variance $\sigma^2$ are known, but nothing else about the form of the distribution is specified? This can be expressed in terms of Chebyshev's inequality, which states that for any positive number $C$,

$$
P\{\mu - C\sigma < X < \mu + C\sigma\} \ge 1 - \frac{1}{C^2},
$$

where $X$ is any random variable having mean $\mu$ and variance $\sigma^2$. For example, if $C = 3$, it follows that $P\{\mu - 3\sigma < X < \mu + 3\sigma\} \ge 1 - 1/9 \approx 0.8889$. However, if $X$ is known to have a normal distribution, then $P\{\mu - 3\sigma < X < \mu + 3\sigma\} \approx 0.9973$. Note that the Chebyshev inequality only gives a lower bound on the probability (usually a very conservative one), so there is no contradiction here.
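The comparison between the Chebyshev bound and the normal distribution can be reproduced with a few lines of code. This is a minimal sketch: the function names are illustrative, and the normal probability relies on the standard identity that a normal random variable lies within C standard deviations of its mean with probability erf(C / sqrt(2)).

```python
import math


def chebyshev_lower_bound(c):
    """Lower bound 1 - 1/C^2 on the probability of falling within
    C standard deviations of the mean, valid for any distribution
    with finite variance."""
    return 1.0 - 1.0 / c ** 2


def normal_within(c):
    """Exact probability that a normal random variable falls within
    C standard deviations of its mean."""
    return math.erf(c / math.sqrt(2.0))


for c in (1.5, 2.0, 3.0):
    print(f"C = {c}: Chebyshev >= {chebyshev_lower_bound(c):.4f}, "
          f"normal = {normal_within(c):.4f}")
```

For every value of C in the loop the normal probability exceeds the Chebyshev bound, which illustrates how conservative the bound is when the form of the distribution is actually known.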
