HUMAN FACTORS AUDIT: AUDIT SYSTEM DESIGN

AUDIT SYSTEM DESIGN

As outlined in Section 2, the audit system must choose a sample, measure that sample, evaluate it, and communicate the results. In this section we approach these issues systematically.

An audit system is not just a checklist; it is a methodology that often includes the technique of a checklist. The distinction needs to be made between methodology and techniques. Over three decades ago, Easterby (1967) used Bainbridge and Beishon’s (1964) definitions:

Methodology: a principle for defining the necessary procedures

Technique: a means to execute a procedural step.

Easterby notes that a technique may be applicable in more than one methodology.

The Sampling Scheme

In any sampling, we must define the unit of sampling, the sampling frame, and the sample choice technique. For a human factors audit the unit of sampling is not as self-evident as it appears. From a job-evaluation viewpoint (e.g., McCormick 1979), the natural unit is the job that is composed of a number of tasks. From a medical viewpoint the unit would be the individual. Human factors studies focus on the task / operator / machine / environment (TOME) system (Drury 1992) or equivalently the software / hardware / environment / liveware (SHEL) system (ICAO 1989). Thus, from a strictly human factors viewpoint, the specific combination of TOME can become the sampling unit for an audit program.

Unfortunately, this simple view does not cover all of the situations for which an audit program may be needed. While it works well for the rather repetitive tasks performed at a single workplace, typical of much manufacturing and service industry, it cannot suffice when these conditions do not hold. One relaxation is to remove the stipulation of a particular incumbent, allowing for jobs that require frequent rotation of tasks. This means that the results for one task will depend upon the incumbent chosen, or that several tasks will need to be combined if an individual operator is of interest. A second relaxation is that the same operator may move to different workplaces, thus changing environment as well as task. This is typical of maintenance activities, where a mechanic may perform any one of a repertoire of hundreds of tasks, rarely repeating the same task. Here the rational sampling unit is the task, which is observed for a particular operator at a particular machine in a particular environment. Examples of audits of repetitive tasks (Mir 1982; Drury 1990a) and maintenance tasks (Chervak and Drury 1995) are given below to illustrate these different approaches.

Definition of the sampling frame, once the sampling unit is settled, is more straightforward. Whether the frame covers a department, a plant, a division, or a whole company, enumeration of all sampling units is at least theoretically possible. All workplaces or jobs or individuals can in principle be listed, although in practice the list may never be up to date in an agile industry where change is the normal state of affairs. Individuals can be listed from personnel records, tasks from work orders or planning documents, and workplaces from plant layout plans. A greater challenge, perhaps, is to decide whether indeed the whole plant really is the focus of the audit. Do we include office jobs or just production? What about managers, chargehands, part-time janitors, and so on? A good human factors program would see all of these tasks or people as worthy of study, but in practice they may have had different levels of ergonomic effort expended upon them. Should some tasks or groups be excluded from the audit merely because most participants agree that they have few pressing human factors problems? These are issues that need to be decided explicitly before the audit sampling begins.

Choice of the sample from the sampling frame is well covered in sociology texts. Within human factors it typically arises in the context of survey design (Sinclair 1990). To make statistical inferences from the sample to the population (specifically to the sampling frame), our sampling procedure must allow the laws of probability to be applied. The most often-used sampling methods are:

Random sampling: Each unit within the sampling frame is equally likely to be chosen for the sample. This is the simplest and most robust method, but it may not be the most efficient. Where subgroups of interest (strata) exist and these subgroups are not equally represented in the sampling frame, one collects unnecessary information on the most populous subgroups and insufficient information on the least populous. This is because our ability to estimate a population statistic from a sample depends upon the absolute sample size and not, in most practical cases, on the population size. As a corollary, if subgroups are of no interest, then random sampling loses nothing in efficiency.

Stratified random sampling: Each unit within a particular stratum of the sampling frame is equally likely to be chosen for the sample. With stratified random sampling we can make valid inferences about each of the strata. By weighting the statistics to reflect the size of the strata within the sampling frame, we can also obtain population inferences. This is often the preferred auditing sampling method as, for example, we would wish to distinguish between different classes of tasks in our audits: production, warehouse, office, management, maintenance, security, and so on. In this way our audit interpretation could give more useful information concerning where ergonomics is being used appropriately.

Cluster sampling: Clusters of units within the sampling frame are selected, followed by random or nonrandom selection within clusters. Examples of clusters would be the selection of particular production lines within a plant (Drury 1990a) or selection of representative plants within a company or division. The difference between cluster and stratified sampling is that in cluster sampling only a subset of possible units within the sampling frame is selected, whereas in stratified sampling all of the sampling frame is used because each unit must belong to one stratum. Because clusters are not randomly selected, the overall sample results will not reflect population values, so that statistical inference is not possible. If units are chosen randomly within each cluster, then statistical inference within each cluster is possible. For example, if three production lines are chosen as clusters, and workplaces sampled randomly within each, the clusters can be regarded as fixed levels of a factor and the data subjected to analysis of variance to determine whether there are significant differences between levels of that factor. What is sacrificed in cluster sampling is the ability to make population statements. Continuing this example, we could state that the lighting in line A is better than in lines B or C but still not be able to make statistically valid statements about the plant as a whole.
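The three sampling methods above can be illustrated with a minimal Python sketch. It is not taken from any of the cited audit programs: the function names, the example frame of 100 workplaces, and the task classes and line labels are all hypothetical, chosen only to show how the selection rules differ and how stratum statistics are weighted by stratum size to give a population estimate.

```python
import random
from collections import defaultdict


def simple_random_sample(frame, n, seed=None):
    """Random sampling: every unit in the frame is equally likely to be chosen."""
    rng = random.Random(seed)
    return rng.sample(frame, n)


def stratified_random_sample(frame, stratum_of, n_per_stratum, seed=None):
    """Stratified random sampling: draw units at random within every stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in frame:
        strata[stratum_of(unit)].append(unit)
    return {
        name: rng.sample(units, min(n_per_stratum, len(units)))
        for name, units in strata.items()
    }


def weighted_population_mean(stratum_means, stratum_sizes):
    """Weight each stratum mean by that stratum's share of the sampling frame."""
    total = sum(stratum_sizes.values())
    return sum(stratum_means[s] * stratum_sizes[s] / total for s in stratum_means)


def cluster_sample(frame, cluster_of, chosen_clusters, n_per_cluster, seed=None):
    """Cluster sampling: clusters are chosen deliberately (not at random),
    then units are drawn at random within each chosen cluster."""
    rng = random.Random(seed)
    clusters = defaultdict(list)
    for unit in frame:
        cluster = cluster_of(unit)
        if cluster in chosen_clusters:
            clusters[cluster].append(unit)
    return {
        name: rng.sample(units, min(n_per_cluster, len(units)))
        for name, units in clusters.items()
    }


# Hypothetical sampling frame: (workplace id, task class, production line).
frame = [(i, "production" if i < 80 else "office", "ABC"[i % 3]) for i in range(100)]

by_class = stratified_random_sample(frame, lambda u: u[1], n_per_stratum=5, seed=1)
by_line = cluster_sample(frame, lambda u: u[2], {"A", "B"}, n_per_cluster=4, seed=1)
```

In this sketch the stratified sample gives the small office stratum as many observations as the large production stratum, which is the efficiency argument made above, while the cluster sample says nothing about line C and so supports comparisons only among the chosen lines.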

The Data-Collection Instrument

So far we have assumed that the instrument used to collect the data from the sample is based upon measured data where appropriate. While this is true of many audit instruments, this is not the only way to collect audit data. Interviews with participants (Drury 1990a), interviews and group meetings to locate potential errors (Fox 1992), and archival data such as injury or quality records (Mir 1982) have been used. All have potential uses with, as remarked earlier, a judicious range of methods often providing the appropriate composite audit system.

One consideration in audit technique design and use is the extent of computer involvement. Computers are now inexpensive, portable, and powerful and can thus be used to assist data collection, data verification, data reduction, and data analysis (Drury 1990a). With the advent of more intelligent interfaces, checklist questions can be answered from mouse-clicks on buttons or selection from menus, as well as the more usual keyboard entry. Data verification can take place at entry time by checking for out-of-limits data or odd data such as the ratio of luminance to illuminance, implying a reflectivity greater than 100%. In addition, branching in checklists can be made easier, with only valid follow-on questions highlighted. The checklist user’s manual can be built into the checklist software using context-sensitive help facilities, as in the EEAM checklist (Chervak and Drury 1995). Computers can, of course, be used for data reduction (e.g., finding the insulation value of clothing from a clothing inventory), data analysis, and results presentation.
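Entry-time verification of the kind just described might look like the following sketch. It is an illustration only, not code from any of the cited audit programs: the field names and the plausibility limits are hypothetical, and the reflectance check assumes an ideal diffuse surface, for which reflectance equals pi times luminance (cd/m²) divided by illuminance (lux).

```python
import math

# Hypothetical plausibility limits for a few checklist fields (assumed values).
LIMITS = {
    "illuminance_lux": (0.0, 100_000.0),
    "luminance_cd_m2": (0.0, 50_000.0),
    "sound_level_dBA": (20.0, 140.0),
}


def verify_entry(entry):
    """Return a list of warnings for one set of checklist readings."""
    warnings = []

    # Out-of-limits check on each field that was actually entered.
    for field, (low, high) in LIMITS.items():
        value = entry.get(field)
        if value is not None and not (low <= value <= high):
            warnings.append(f"{field}={value} is outside {low}..{high}")

    # Consistency check: luminance and illuminance together imply a
    # reflectance, which cannot exceed 100% for a passive diffuse surface.
    luminance = entry.get("luminance_cd_m2")
    illuminance = entry.get("illuminance_lux")
    if luminance is not None and illuminance:
        reflectance = math.pi * luminance / illuminance
        if reflectance > 1.0:
            warnings.append(f"implied reflectance {reflectance:.0%} exceeds 100%")

    return warnings


plausible = verify_entry({"illuminance_lux": 500, "luminance_cd_m2": 100})
suspect = verify_entry({"illuminance_lux": 300, "luminance_cd_m2": 200})
```

Here the first entry implies a reflectance of roughly 63% and passes, whereas the second implies more than 100% and would be flagged for the auditor to re-measure before leaving the workplace.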

With the case for computer use made, some cautions are in order. Computers are still bulkier than simple pencil-and-paper checklists. Computer reliability is not perfect, so inadvertent data loss is still a real possibility. Finally, software and hardware date much more rapidly than hard copy, so results safely stored on the latest media may be unreadable 10 years later. How many of us can still read punched cards or eight-inch floppy disks? In contrast, hard-copy records are still available from before the start of the computer era.

Checklists and Surveys

For many practitioners the proof of the effectiveness of an ergonomics effort lies in the ergonomic quality of the TOME systems it produces. A plant or office with appropriate human–machine function allocation, well-designed workplaces, comfortable environment, adequate placement / training, and inherently satisfying jobs almost by definition has been well served by human factors. Such a facility may not have human factors specialists, just good designers of environment, training, organization, and so on working independently, but this would generally be a rare occurrence. Thus, a checklist to measure such inherently ergonomic qualities has great appeal as part of an audit system.

Such checklists are almost as old as the discipline. Burger and deJong (1964) list four earlier checklists for ergonomic job analysis before going on to develop their own, which was commissioned by the International Ergonomics Association in 1961 and is usually known as the IEA checklist. It was based in part on one developed at the Philips Health Centre by G. J. Fortuin and provided in detail in Burger and deJong’s paper.

Checklists have their limitations, though. The cogent arguments put forward by Easterby (1967) provide a good early summary of these limitations, and most are still valid today. Checklists are only of use as an aid to designers of systems at the earliest stages of the process. By concentrating on simple questions, often requiring yes / no answers, some checklists may reduce human factors to a simple stimulus–response system rather than encouraging conceptual thinking. Easterby quotes Miller (1967): “I still find that many people who should know better seem to expect magic from analytic and descriptive procedures. They expect that formats can be filled in by dunces and lead to inspired insights. . . . We should find opportunity to exorcise this nonsense” (Easterby 1967, p. 554).

Easterby finds that checklists can have a helpful structure but often have vague questions, make nonspecified assumptions, and lack quantitative detail. Checklists are seen as appropriate for some parts of ergonomics analysis (as opposed to synthesis) and even more appropriate to aid operators (not ergonomists) in following procedural steps. This latter use has been well covered by Degani and Wiener (1990) and will not be further presented here.

Clearly, we should be careful, even 30 years on, to heed these warnings. Many checklists are developed, and many of these published, that contain design elements fully justifying such criticisms.

A checklist, like any other questionnaire, needs to have both a helpful overall structure and well-constructed questions. It should also be proven reliable, valid, sensitive, and usable, although precious few meet all of these criteria. In the remainder of this section, a selection of checklists will be presented as typical of (reasonably) good practice. Emphasis will be on objective, structure, and question design.

The IEA Checklist

The IEA checklist (Burger and de Jong 1964) was designed for ergonomic job analysis over a wide range of jobs. It uses the concept of functional load to give a logical framework relating the physical load, perceptual load, and mental load to the worker, the environment, and the working methods / tools / machines. Within each cell (or subcell, e.g., physical load could be static or dynamic), the load was assessed on different criteria such as force, time, distance, occupational, medical, and psychological criteria. Table 1 shows the structure and typical questions. Dirken (1969) modified the IEA checklist to improve the questions and methods of recording. He found that it could be applied in a median time of 60 minutes per workstation. No data are given on evaluation of the IEA checklist, but its structure has been so influential that it is included here for more than historical interest.

Position Analysis Questionnaire

The PAQ is a structured job analysis questionnaire using worker-oriented elements (187 of them) to characterize the human behaviors involved in jobs (McCormick et al. 1969). The PAQ is structured into six divisions, with the first three representing the classic experimental psychology approach (information input, mental process, work output) and the other three a broader sociotechnical view (relationships with other persons, job context, other job characteristics). Table 2 shows these major divisions, examples of job elements in each, and the rating scales employed for response (McCormick 1979).

Construct validity was tested by factor analyses of databases containing 3700 and 2200 jobs, which established 45 factors. Thirty-two of these fit neatly into the original six-division framework, with the remaining 13 being classified as “overall dimensions.” Further proof of construct validity was based on 76 human attributes derived from the PAQ, rated by industrial psychologists and the ratings subjected to principal components analysis to develop dimensions “which had reasonably similar attribute profiles” (McCormick 1979, p. 204). Interrater reliability, as noted above, was 0.79, based on another sample of 62 jobs.

The PAQ covers many of the elements of concern to human factors engineers and has indeed much influenced subsequent instruments such as AET. With good reliability and useful (though perhaps dated) construct validity, it is still a viable instrument if the natural unit of sampling is the job. The exclusive reliance on rating scales applied by the analyst goes rather against current practice of comparison of measurements against standards or good practices.

AET (Arbeitswissenschaftliches Erhebungsverfahren zur Tätigkeitsanalyse)

The AET, published in German (Landau and Rohmert 1981) and later in English (Rohmert and Landau 1983), is the job-analysis subsystem of a comprehensive system of work studies. It covers “the analysis of individual components of man-at-work systems as well as the description and scaling of their interdependencies” (Rohmert and Landau 1983, pp. 9–10). Like all good techniques, it starts from a model of the system (REFA 1971, referenced in Wagner 1989), to which is added Rohmert’s stress / strain concept. This latter sees strain as being caused by the intensity and duration of stresses impinging upon the operator’s individual characteristics. It is seen as useful in the analysis of requirements and work design, organization in industry, personnel management, and vocational counseling and research.

AET itself was developed over many years, using PAQ as an initial starting point. Table 3 shows the structure of the survey instrument with typical questions and rating scales. Note the similarity between AET’s job demands analysis and the first three categories of the PAQ and the scales used in AET and PAQ (Table 2).

Measurements of validity and reliability of AET are discussed by H. Luczak in an appendix to Landau and Rohmert, although no numerical values are given. Cluster analysis of 99 AET records produced groupings which supported the AET constructs. Seeber et al. (1989) used AET along with two other work-analysis methods on 170 workplaces. They found that AET provided the most differentiating aspects (suggesting sensitivity). They also measured postural complaints and showed that only the AET groupings for 152 female workers found significant differences between complaint levels, thus helping establish construct validity.

AET, like PAQ before it, has been used on many thousands of jobs, mainly in Europe. A sizable database is maintained that can be used for both norming of new jobs analyzed and analysis to test research hypotheses. It remains a most useful instrument for work analysis.

Ergonomics Audit Program (Mir 1982; Drury 1990a)

This program was developed at the request of a multinational corporation to be able to audit its various divisions and plants as ergonomics programs were being instituted. The system developed was a methodology of which the workplace survey was one technique. Overall, the methodology used archival data or outcome measures (injury reports, personnel records, productivity) and critical incidents to rank order departments within a plant. A cluster sampling of these departments gives either the ones with highest need (if the aim is to focus ergonomic effort) or a sample representative of the plant (if the objective is an audit). The workplace survey is then performed on the sampled departments.

The workplace survey was designed based on ergonomic aspects derived from a task / operator / machine / environment model of the person at work. Each aspect formed a section of the audit, and sections could be omitted if they were clearly not relevant, for example, manual materials-handling aspects for data-entry clerks. Questions within each section were based on standards, guidelines, and models, such as the NIOSH (1981) lifting equation, ASHRAE Handbook of Fundamentals for thermal aspects, and Givoni and Goldman’s (1972) model for predicting heart rate. Table 4 shows the major sections and typical questions.

Counts of discrepancies were used to evaluate departments by ergonomics aspect, while the messages were used to alert company personnel to potential design changes. This latter use of the output as a training device for nonergonomic personnel was seen as desirable in a multinational company rapidly expanding its ergonomics program.

Reliability and validity have not been assessed, although the checklist has been used in a number of industries (Drury 1990a). The Workplace Survey has been included here because, despite its lack of measured reliability and validity, it shows the relationship between audit as methodology and checklist as technique.

ERGO, EEAM, and ERNAP (Koli et al. 1993; Chervak and Drury 1995)

These checklists are both part of complete audit systems for different aspects of civil aircraft hangar activities. They were developed for the Federal Aviation Administration to provide tools for assessing human factors in aircraft inspection (ERGO) and maintenance (EEAM) activities, respectively. Inspection and maintenance activities are nonrepetitive in nature, controlled by task cards issued to technicians at the start of each shift. Thus, the sampling unit is the task card, not the workplace, which is highly variable between task cards. Their structure was based on extensive task analyses of inspection and maintenance tasks, which led to generic function descriptions of both types of work (Drury et al. 1990). Both systems have sampling schemes and checklists. Both are computer based with initial data collection on either hard copy or direct into a portable computer. Recently, both have been combined into a single program (ERNAP) distributed by the FAA’s Office of Aviation Medicine. The structure of ERNAP and typical questions are given in Table 5.

As in Mir’s Ergonomics Audit Program, the ERNAP checklist is again modular, and the software allows formation of data files, selection of required modules, analysis after data entry is completed, and printing of audit reports. Similarly, the ERGO, EEAM, and ERNAP instruments use quantitative or Yes / No questions comparing the entered value with standards and good practice guides. Each takes about 30 minutes per task. Output is in the form of an audit report for each workplace, similar to the messages given by Mir’s Workplace Survey, but in narrative form. Output in this form was chosen for compatibility with existing performance and compliance audits used by the aviation maintenance community.

Reliability of a first version of ERGO was measured by comparing the output of two auditors on three tasks. Significant differences were found at P < 0.05 on all three tasks, showing a lack of interrater reliability. Analysis of these differences showed them to be largely due to errors on questions requiring auditor judgment. When such questions were replaced with more quantitative questions, the two auditors had no significant disagreements on a later test. Validity was measured using concurrent validation against six Ph.D. human factors engineers who were asked to list all ergonomic issues on a power plant inspection task. The checklist found more ergonomic issues than the human factors engineers. Only a small number of issues were raised by the engineers that were missed by the checklist. For the EEAM checklist, again an initial version was tested for reliability with two auditors, and it only achieved the same outcome for 85% of the questions. A modified version was tested and the reliability was considered satisfactory with 93% agreement. Validity was again tested against four human factors engineers; this time the checklist found significantly more ergonomic issues than the engineers without missing any issues they raised.
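Agreement figures such as the 85% and 93% quoted for EEAM can be computed very simply. The sketch below assumes that agreement means the proportion of checklist questions on which two auditors recorded identical answers for the same task; the source does not state the exact calculation, and the question identifiers and answers shown are hypothetical.

```python
def percent_agreement(answers_a, answers_b):
    """Percentage of questions on which two auditors gave the same answer."""
    if len(answers_a) != len(answers_b):
        raise ValueError("both auditors must answer the same set of questions")
    if not answers_a:
        raise ValueError("at least one question is required")
    matches = sum(a == b for a, b in zip(answers_a, answers_b))
    return 100.0 * matches / len(answers_a)


def disagreements(question_ids, answers_a, answers_b):
    """Identify the questions on which the two auditors differ."""
    return [q for q, a, b in zip(question_ids, answers_a, answers_b) if a != b]


# Hypothetical answers from two auditors observing the same task card.
question_ids = ["Q1", "Q2", "Q3", "Q4"]
auditor_1 = ["Yes", "No", "Yes", "Yes"]
auditor_2 = ["Yes", "No", "No", "Yes"]

agreement = percent_agreement(auditor_1, auditor_2)
to_review = disagreements(question_ids, auditor_1, auditor_2)
```

Listing the questions in dispute, rather than reporting the percentage alone, mirrors the revision process described above, in which judgment-based questions that caused disagreement were replaced with more quantitative ones.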

The ERNAP audits have been included here to provide examples of a checklist embedded in an audit system where the workplace is not the sampling unit. They show that non-repetitive tasks can be audited in a valid and reliable manner. In addition, they demonstrate how domain-specific audits can be designed to take advantage of human factors analyses already made in the domain.

Upper-Extremity Checklist (Keyserling et al. 1993)

As its name suggests, this checklist is narrowly focused on biomechanical stresses to the upper extremities that could lead to cumulative trauma disorders (CTDs). It does not claim to be a full-spectrum analysis tool, but it is included here as a good example of a special-purpose checklist that has been carefully constructed and validated. The checklist (Table 6) was designed for use by management and labor to fulfill a requirement in the OSHA guidelines for meat-packing plants. The aim is to screen jobs rapidly for harmful exposures rather than to provide a diagnostic tool. Questions were designed based upon the biomechanical literature, structured into six sections. Scoring was based on simple presence or absence of a condition, or on a three-level duration score. As shown in Table 6, the two or three levels were scored as o, ·, or * depending upon the stress rating built into the questionnaire. These symbols represented insignificant, moderate, or substantial exposures. A total score could be obtained by summing moderate and substantial exposures.

The upper extremity checklist was designed to be biased towards false positives, that is, to be very sensitive. It was validated against detailed analyses of 51 jobs by an ergonomics expert. Each section (except the first, which only recorded dominant hand) was considered as giving a positive screening if at least one * rating was recorded. Across the various sections, there was reasonable agreement between checklist users and the expert analysis, with the checklist being generally more sensitive, as was its aim. The original reference shows the findings of the checklist when applied to 335 manufacturing and warehouse jobs.
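The scoring rules described for the upper extremity checklist can be expressed in a few lines. The sketch below is one reading of those rules, not the published scoring procedure: it treats the total score as the count of moderate (·) and substantial (*) ratings, flags a section as a positive screening when it contains at least one * rating, and uses hypothetical section names and ratings.

```python
# Symbols as described in the text: insignificant, moderate, substantial.
SYMBOL_MEANING = {"o": "insignificant", "·": "moderate", "*": "substantial"}


def total_score(ratings_by_section):
    """Count the ratings that indicate a moderate or substantial exposure."""
    return sum(
        1
        for ratings in ratings_by_section.values()
        for rating in ratings
        if rating in ("·", "*")
    )


def positive_sections(ratings_by_section):
    """Sections with at least one substantial (*) rating screen positive."""
    return [name for name, ratings in ratings_by_section.items() if "*" in ratings]


# Hypothetical ratings for one job; the section names are illustrative only.
job = {
    "repetitiveness": ["*"],
    "force": ["o", "·"],
    "posture": ["·", "*", "o"],
}

score = total_score(job)
flagged = positive_sections(job)
```

Because a single * rating is enough to flag a section, the rule errs towards false positives, which matches the stated aim of a sensitive screening tool rather than a diagnostic one.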

As a special-purpose technique in an area of high current visibility for human factors, the upper extremity checklist has proven validity, can be used by those with minimal ergonomics training for screening jobs, and takes only a few minutes per workstation. The same team has also developed and validated a legs, trunk, and neck job screening procedure along similar lines (Keyserling et al. 1992).

Ergonomic Checkpoints

The Workplace Improvement in Small Enterprises (WISE) methodology (Kogi 1994) was developed by the International Ergonomics Association (IEA) and the International Labour Office (ILO) to provide cost-effective solutions for smaller organizations. It consists of a training program and a checklist of potential low-cost improvements. This checklist, called ergonomics checkpoints, can be used both as an aid to discovery of solutions and as an audit tool for workplaces within an enterprise.

The 128-point checklist has now been published (Kogi and Kuorinka 1995). It covers the nine areas shown in Table 7. Each item is a statement rather than a question and is called a checkpoint. For each checkpoint there are four sections, also shown in Table 7. There is no scoring system as such; rather, each checkpoint becomes a point of evaluation of each workplace for which it is appropriate. Note that each checkpoint also covers why that improvement is important, and a description of the core issues underlying it. Both of these help the move from rule-based reasoning to knowledge-based reasoning as nonergonomists continue to use the checklist. A similar idea was embodied in the Mir (1982) ergonomic checklist.

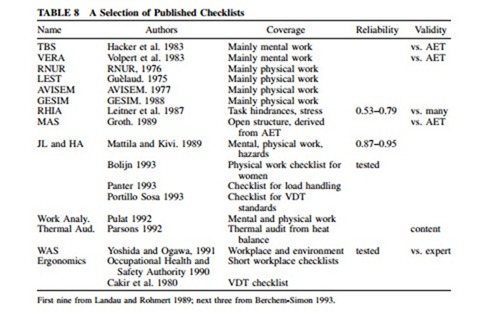

Other Checklists

The above sample of successful audit checklists has been presented in some detail to provide the reader with their philosophy, structure, and sample questions. Rather than continue in the same vein, other interesting checklists are outlined in Table 8. Each entry shows the domain, the types of issues addressed, the size or time taken in use, and whether validity and reliability have been measured. Most textbooks now provide checklists, and a few of these are cited. No claim is made that Table 8 is comprehensive. Rather, it is a sampling with references so that readers can find a suitable match to their needs. The first nine entries in the table are conveniently colocated in Landau and Rohmert (1989). Many of their reliability and validity studies are reported in this publication. The next entries are results of the Commission of European Communities fifth ECSC program, reported in Berchem-Simon (1993). Others are from texts and original references. The author has not personally used all of these checklists and thus cannot specifically endorse them. Also, omission of a checklist from this table implies nothing about its usefulness.

Other Data-Collection Methods

Not all data come from checklists and questionnaires. We can audit a human factors program using outcome measures alone (e.g., Chapter 47). However, outcome measures such as injuries, quality, and productivity are nonspecific to human factors: many other external variables can affect them. An obvious example is changes in the reporting threshold for injuries, which can lead to sudden apparent increases and decreases in the safety of a department or plant. Additionally, injuries are (or should be) extremely rare events. Thus, to obtain enough data to perform meaningful statistical analysis may require aggregation over many disparate locations and / or time periods. In ergonomics audits, such outcome measures are perhaps best left for long-term validation or for use in selecting cluster samples.

Besides outcome measures, interviews represent a possible data-collection method. Whether directed or not (e.g., Sinclair 1990) they can produce critical incidents, human factors examples, or networks of communication (e.g., Drury 1990a), which have value as part of an audit procedure. Interviews are routinely used as part of design audit procedures in large-scale operations such as nuclear power plants (Kirwan 1989) or naval systems (Malone et al. 1988).

A novel interview-based audit system was proposed by Fox (1992) based on methods developed in British Coal (reported in Simpson 1994). Here an error-based approach was taken, using interviews and archival records to obtain a sampling of actual and possible errors. These were then classified using Reason’s (1990) active / latent failure scheme and orthogonally by Rasmussen’s (1987) skill-, rule-, knowledge-based framework. Each active error is thus a conjunction of slip / mistake / violation with skill / rule / knowledge. Within each conjunction, performance-shaping factors can be deduced and sources of management intervention listed. This methodology has been used in a number of mining-related studies: examples will be presented in Section 4.
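A two-way classification of this kind amounts to a cross-tabulation of the sampled errors. The following sketch shows one way such a table might be built; it is not Fox's procedure, the error records are hypothetical, and the labels used (slip, mistake, and violation as error types; skill, rule, and knowledge as performance levels) are simply passed in as strings.

```python
from collections import Counter


def cross_tabulate(classified_errors):
    """Count errors in each (error type, performance level) conjunction.

    classified_errors is an iterable of (error_type, level) pairs.
    Returns a nested dict: table[error_type][level] -> count.
    """
    pairs = list(classified_errors)
    counts = Counter(pairs)
    error_types = sorted({etype for etype, _ in pairs})
    levels = sorted({level for _, level in pairs})
    return {
        etype: {level: counts[(etype, level)] for level in levels}
        for etype in error_types
    }


# Hypothetical errors gathered from interviews and archival records.
errors = [
    ("slip", "skill"),
    ("slip", "skill"),
    ("mistake", "rule"),
    ("mistake", "knowledge"),
    ("violation", "rule"),
]

table = cross_tabulate(errors)
```

Cells with high counts indicate the conjunctions for which performance-shaping factors and management interventions most need to be examined.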

Data Analysis and Presentation

Human factors as a discipline covers a wide range of topics, from workbench height to function allocation in automated systems. An audit program can only hope to abstract and present a part of this range. With our consideration of sampling systems and data collection devices we have seen different ways in which an unbiased abstraction can be aided. At this stage the data consist of large numbers of responses to large numbers of checklist items, or detailed interview findings. How can, or should, these data be treated for best interpretation?

Here there are two opposing viewpoints: one is that the data are best summarized across sample units, but not across topics. This is typically the way the human factors professional community treats the data, giving summaries in published papers of the distribution of responses to individual items on the checklist. In this way, findings can be more explicit, for example that the lighting is an area that needs ergonomics effort, or that the seating is generally poor. Adding together lighting and seating discrepancies is seen as perhaps obscuring the findings rather than assisting in their interpretation.

The opposite viewpoint, in many ways, is taken by the business community. For some, an overall figure of merit is a natural outcome of a human factors audit. With such a figure in hand, the relative needs of different divisions, plants, or departments can be assessed in terms of ergonomic and engineering effort required. Thus, resources can be distributed rationally from a management level. This view is heard from those who work for manufacturing and service industries, who ask after an audit “How did we do?” and expect a very brief answer. The proliferation of the spreadsheet, with its ability to sum and average rows and columns of data, has encouraged people to do just that with audit results. Repeated audits fit naturally into this view because they can become the basis for monthly, quarterly, or annual graphs of ergonomic performance.

Neither view alone is entirely defensible. Of course, summing lighting and seating needs produces a result that is logically indefensible and that does not help diagnosis. But equally, decisions must be made concerning optimum use of limited resources. The human factors auditor, having chosen an unbiased sampling scheme and collected data on (presumably) the correct issues, is perhaps in an excellent position to assist in such management decisions. But so too are other stakeholders, primarily the workforce.
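The contrast between the two viewpoints can be made concrete with a small sketch. It assumes, purely for illustration, that each audit record is a (workplace, topic, discrepancy found) triple; the record layout, function names, and data are hypothetical and are not drawn from any of the audit programs discussed.

```python
from collections import defaultdict


def discrepancy_rate_by_topic(records):
    """Summarize across sample units but not across topics."""
    counts = defaultdict(lambda: [0, 0])  # topic -> [discrepancies, observations]
    for _workplace, topic, is_discrepancy in records:
        counts[topic][0] += int(is_discrepancy)
        counts[topic][1] += 1
    return {topic: bad / n for topic, (bad, n) in counts.items()}


def overall_discrepancy_rate(records):
    """Single figure of merit: summarize across both units and topics."""
    records = list(records)
    return sum(int(is_discrepancy) for _, _, is_discrepancy in records) / len(records)


# Hypothetical audit records: (workplace, topic, discrepancy found?).
records = [
    ("W1", "lighting", True),
    ("W2", "lighting", True),
    ("W1", "seating", False),
    ("W2", "seating", True),
]

by_topic = discrepancy_rate_by_topic(records)
overall = overall_discrepancy_rate(records)
```

With these data the per-topic summary shows that every workplace has a lighting discrepancy while only half have a seating discrepancy, a distinction that the single overall rate of 0.75 conceals even though it may be the number management asks for.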

Audits, however, are not the only use of some of the data-collection tools. For example, the Keyserling et al. (1993) upper extremity checklist was developed specifically as a screening tool. Its objective was to find which jobs / workplaces are in need of detailed ergonomic study. In such cases, summing across issues for a total score has an operational meaning, that is, that a particular workplace needs ergonomic help.

Where interpretation is made at a deeper level than just a single number, a variety of presentation devices have been used. These must show scores (percent of workplaces, distribution of sound pressure levels, etc.) separately but so as to highlight broader patterns. Much is now known about separate vs. integrated displays and emergent features (e.g., Wickens 1992, pp. 121–122), but the traditional profiles and spider web charts are still the most usual presentation forms. Thus, Wagner (1989) shows the AVISEM profile for a steel industry job before and after automation. The nine different issues (rating factors) are connected by lines to show emergent shapes for the old and the new jobs. Landau and Rohmert’s (1981) original book on AET shows many other examples of profiles. Klimer et al. (1989) present a spider web diagram to show how three work structures influenced ten issues from the AET analysis. Mattila and Kivi (1989) present their data on the job load and hazard analysis system applied to the building industry in the form of a table. For six occupations, the rating on five different loads / hazards is presented as symbols of different sizes within the cells of the table.

There is little that is novel in the presentation of audit results: practitioners tend to use the standard tabular or graphical tools. But audit results are inherently multidimensional, so some thought is needed if the reader is to be helped towards an informed comprehension of the audit’s outcome.
