HUMAN–COMPUTER INTERACTION: COGNITIVE DESIGN

COGNITIVE DESIGN

Overview

Cognitive design, also referred to as cognitive engineering, is a multidisciplinary approach to system design that considers the analysis, design, and evaluation of interactive systems (Vicente 1999). Cognitive design involves developing systems through an understanding of human capabilities and limitations. It focuses on how humans process information and aims to identify users’ mental models, such that supporting metaphors and analogies can be identified and designed into systems (Eberts 1994). The general goal of cognitive design is thus to design interactive systems that are predictable (i.e., that respond to the way users perceive, think, and act). Through the application of this approach, human–computer interaction has evolved into a relatively standard set of interaction techniques, including typing, pointing, and clicking. This set of “standard” interaction techniques is evolving, with a transition from graphical user interfaces to perceptual user interfaces that seek to interact with users more naturally through multimodal and multimedia interaction (Turk and Robertson 2000). In either case, however, these interfaces are characterized by interaction techniques that try to match the interface design to user capabilities and limitations.

Cognitive design efforts are guided by the requirements definition, user profile development, task analysis, task allocation, and usability goal setting, all of which are informed by an intrinsic understanding of the target work environment. Although these activities are listed and presented in this order, they are conducted iteratively throughout the system development life cycle.

Requirements Definition

Requirements definition involves the specification of the necessary goals, functions, and objectives to be met by the system design (Eberts 1994; Rouse 1991). The intent of the requirements definition is to specify what a system should be capable of doing and the functions that must be available to users to achieve stated goals. Karat and Dayton (1995) suggest that developing a careful understanding of system requirements leads to more effective initial designs that require less redesign. Ethnographic evaluation can be used to develop a requirements definition that is necessary and complete to support the target domain (Nardi 1997).

Goals specify the desired system characteristics (Rouse 1991). These are generally qualitatively stated (e.g., automate functions, maximize use, accommodate user types) and can be met in a number of ways. Functions define what the system should be capable of doing without specifying how the functions should be achieved. Objectives are the activities that the system must be able to accomplish in support of the specified functions. Note that the system requirements, as stated in terms of goals, functions, and objectives, can be achieved by a number of design alternatives. Thus, the requirements definition specifies what the system should be able to accomplish without specifying how this should be realized. It can be used to guide the overall design effort to ensure the desired end is achieved. Once a set of functional and feature requirements has been scoped out, an understanding of the current work environment is needed in order to design systems that effectively support these requirements.

Contextual Task Analysis

The objective of contextual task analysis is to achieve a user-centered model of current work practices (Mayhew 1999). It is important to determine how users currently carry out their tasks, which individuals they interact with, what tools support the accomplishment of their job goals, and the resulting products of their efforts. Formerly this was often achieved by observing a user or set of users in a laboratory setting and having them provide verbal protocols as they conducted task activities in the form of use cases (Hackos and Redish 1998; Karat 1988; Mayhew 1999; Vermeeren 1999). This approach, however, fails to take into consideration the influences of the actual work setting. Through an understanding of the work environment, designers can leverage current practices that are effective while designing out those that are ineffective. The results of a contextual task analysis include work environment and task analyses, from which mental models can be identified and user scenarios and task-organization models (e.g., use sequences, use flow diagrams, use workflows, and use hierarchies) can be derived (Mayhew 1999). These models and scenarios can then help guide the design of the system. As depicted in Figure 3, contextual task analysis consists of three main steps.

Effective interactive system design thus comes from a basis in direct observation of users in their work environments rather than from assumptions about the users or observations of their activities in contrived laboratory settings (Hackos and Redish 1998). Yet contextual task analysis is sometimes overlooked because developers assume they know their users or that their user base is too diverse, expensive, or time consuming to get to know. In most cases, however, observation of a small set of diverse users can provide critical insights that lead to more effective and acceptable system designs. For usability evaluations, Nielsen (1993) found that the greatest payoff occurs with just three users.
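Why returns diminish so quickly can be sketched with a commonly used problem-discovery model, in which the expected share of usability problems found by n users is 1 − (1 − L)^n, where L is the probability that a single user reveals a given problem. The value L = 0.31 used below (the average Nielsen and Landauer reported across projects) is an assumption that varies widely between studies, and the function name is illustrative only.

```python
def proportion_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems revealed by n_users participants.

    Assumes each participant independently reveals any given problem with
    probability detection_rate (an assumed average, not a universal constant).
    """
    return 1.0 - (1.0 - detection_rate) ** n_users


if __name__ == "__main__":
    previous = 0.0
    for n in range(1, 6):
        found = proportion_found(n)
        # Print the cumulative share and the marginal gain from the nth user.
        print(f"{n} users: {found:.0%} found (+{found - previous:.0%})")
        previous = found
```

Under this assumed rate, three users would be expected to reveal about two-thirds of the problems, and each additional user adds less than the one before.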

Background Information

It is important when planning a task analysis to first become familiar with the work environment. If analysts do not understand work practices, tools, and jargon prior to commencing a task analysis, they can easily get confused and become unable to follow the task flow. Further, if the first time users see analysts they have clipboard and pen in hand, users are likely to resist being observed or change their behaviors during observation. Analysts should develop a rapport with users by spending time with them, participating in their task activities when possible, and listening to their needs and concerns. Once users are familiar and comfortable with analysts and analysts are likewise versed in work practices, data collection can commence. During this familiarization, analysts can also capture data to characterize users.

Characterizing Users

It is ultimately the users who will determine whether a system is adopted into their lives. Designs that frustrate, stress, or annoy users are not likely to be embraced. Based on the requirements definition, the objective of designers should be to develop a system that can meet specified user goals, functions, and objectives. This can be accomplished through an early and continual focus on the target user population (Gould et al. 1997). It is inconceivable that design efforts would bring products to market without thoroughly determining who the user is. Yet developers, as they expedite system development to rush products to market, are often reluctant to characterize users. In doing so, they may fail to recognize the amount of time they spend speculating upon what users might need, like, or want in a product (Nielsen 1993). Ascertaining this information directly by querying representative users can be both more efficient and more accurate.

Information about users should provide insights into differences in their computer experience, domain knowledge, and amount of training on similar systems (Wixon and Wilson 1997). The results can be summarized in a narrative format that provides a user profile of each intended user group (e.g., primary users, secondary users, technicians and support personnel). No system design, however, will meet the requirements of all types of users. Thus, it is essential to identify, define, and characterize target users. Separate user profiles should be developed for each target user group. The user profiles can then feed directly into the task analysis by identifying the user groups for which tasks must be characterized (Mayhew 1999).

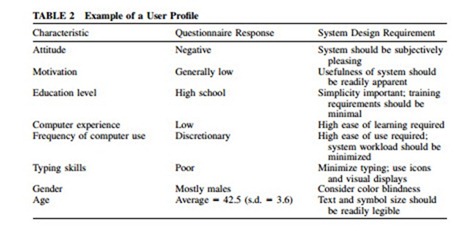

Mayhew (1999) presents a step-by-step process for developing user profiles. First, a determination of user categories is made by identifying the intended user groups for the target system. When developing a system for an organization, this information may come directly from preexisting job categories. Where those do not exist, marketing organizations often have target user populations identified for a given system or product. Next, the relevant user characteristics must be identified. User profiles should be specified in terms of psychological (e.g., attitudes, motivation), knowledge and experience (e.g., educational background, years on job), job and task (e.g., frequency of use), and physical (e.g., stature, visual impairments) characteristics (Mayhew 1999; Nielsen 1993; Wixon and Wilson 1997). While many of these user attributes can be obtained via user profile questionnaires or interviews, psychological characteristics may be best identified via ethnographic evaluation, where a sense of the work environment temperament can be obtained. Once this information is obtained, a summary of the key characteristics for each target user group can be developed, highlighting their implications for the system design. By understanding these characteristics, developers can better anticipate such issues as learning difficulties and specify appropriate levels of interface complexity. System design requirements involve an assessment of the required levels of such factors as ease of learning, ease of use, level of satisfaction, and workload for each target user group (see Table 2).

Individual differences within a user population should also be acknowledged (Egan 1988; Hackos and Redish 1998). While users differ along many dimensions, key areas of user differences have been identified that significantly influence their experience with interactive systems. Users may differ in such attributes as personality, physical or cognitive capacity, motivation, cultural background, education, and training. Users also change over time (e.g., transitioning from novice to expert). By acknowledging these differences, developers can make informed decisions on whether or not to support them in their system designs. For example, marketing could determine which group of individuals it would be most profitable to target with a given system design.

Collecting and Analyzing Data

Contextual task analysis focuses on the behavioral aspects of a task, resulting in an understanding of the general structure and flow of task activities (Mayhew 1999; Nielsen 1993; Wixon and Wilson 1997). This analysis identifies the major tasks and their frequency of occurrence. This can be compared to cognitive task analysis, which identifies the low-level perceptual, cognitive, and motor actions required during task performance (Card et al. 1983; Corbett et al. 1997). Beyond providing an understanding of tasks and workflow patterns, the contextual task analysis also identifies the primary objects or artifacts that support the task, information needs (both inputs and outputs), workarounds that have been adopted, and exceptions to normal work activities. The result of this analysis is a task flow diagram with supporting narrative depicting user-centered task activities, including task goals; information needed to achieve these goals; information generated from achieving these goals; and task organization (i.e., subtasks and interdependencies).

Task analysis thus aims to structure the flow of task activities into a sequential list of functional elements, conditions of transition from one element to the next, required supporting tools and artifacts, and resulting products (Sheridan 1997a). There are both formal and informal techniques for task analysis (see Table 3). Such an analysis can be driven by formal models such as TAKD (task analysis for knowledge description; see Diaper 1989) or GOMS (goals, operators, methods, and selection rules; see Card et al. 1983) or through informal techniques such as interviews, observation and shadowing, surveys, and retrospectives and diaries (Jeffries 1997). With all of these methods, a domain expert is typically queried in some way about his or her task knowledge. It may be beneficial to query a range of users, from novice to expert, to identify differences in their task practices. In either case, it is important to select as informants individuals who can readily verbalize how a task is carried out (Eberts 1994).
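The four parts of a task flow named above (functional elements, transition conditions, supporting tools and artifacts, and resulting products) can be captured in a simple data structure while observation notes are being consolidated. The sketch below is a hypothetical illustration; the class names, fields, and refund example are assumptions rather than part of any of the cited methods.

```python
from dataclasses import dataclass, field


@dataclass
class TaskElement:
    """One functional element in a sequential task flow."""
    name: str
    tools: list[str] = field(default_factory=list)     # supporting tools and artifacts
    products: list[str] = field(default_factory=list)  # outputs of this element
    exit_condition: str = "element complete"           # condition for moving to the next element


@dataclass
class TaskFlow:
    goal: str
    elements: list[TaskElement]

    def describe(self) -> list[str]:
        """Render the flow as a numbered list, one line per element."""
        lines = [f"Goal: {self.goal}"]
        for i, el in enumerate(self.elements, start=1):
            tools = ", ".join(el.tools) or "none"
            products = ", ".join(el.products) or "none"
            lines.append(f"{i}. {el.name} | tools: {tools} | products: {products} "
                         f"| next when: {el.exit_condition}")
        return lines

    def artifacts(self) -> set[str]:
        """All tools and artifacts the flow depends on (candidate interface objects)."""
        return {tool for el in self.elements for tool in el.tools}


# Hypothetical flow recorded while observing a customer-service clerk.
refund = TaskFlow("Process a customer refund", [
    TaskElement("Locate order", ["order database"], ["order record"], "order found"),
    TaskElement("Check eligibility", ["refund policy sheet"], ["approval decision"], "refund approved"),
    TaskElement("Issue refund", ["payment terminal"], ["refund receipt"]),
])

for line in refund.describe():
    print(line)
```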

When a very detailed task analysis is required, formal techniques such as TAKD (Diaper 1989; Kirwan and Ainsworth 1992) or GOMS (Card et al. 1983) can be used to delineate task activities (see Chapter 39). TAKD uses knowledge-representation grammars (i.e., sets of statements used to describe system interaction) to represent task knowledge in a task-descriptive hierarchy. This technique is useful for characterizing complex tasks that lack fine-detail cognitive activities (Eberts 1994). GOMS is a predictive modeling technique that has been used to characterize how humans interact with computers. Through a GOMS analysis, task goals are identified, along with the operators (i.e., perceptual, cognitive, or motor acts) and methods (i.e., series of operators) used to achieve those goals and the selection rules used to choose between alternative methods. The benefit of TAKD and GOMS is that they provide an in-depth understanding of task characteristics, which can be used to quantify the benefits of one design vs. another in terms of consistency (TAKD) or performance time gains (GOMS) (see Gray et al. 1993 and McLeod and Sherwood-Jones 1993 for examples of the effective use of GOMS in design). This deep knowledge, however, comes at a great cost in terms of the time needed to conduct the analysis. Thus, it is important to determine the level of task analysis required for informed design. While formal techniques such as GOMS can lead to very detailed analyses (i.e., at the perceive, think, act level), such detail is often not required for effective design. Jeffries (1997) suggests that one can loosely determine the right level of detail by noting when further decomposition of the task would not reveal any “interesting” new subtasks that would inform the design. If detailed task knowledge is not deemed requisite, informal task-analysis techniques should be adopted.
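The kind of performance-time comparison that GOMS supports can be sketched with its simplest variant, the Keystroke-Level Model (KLM), which sums standard operator times for the physical and mental steps of a method. The operator times below are the approximate values commonly cited from Card et al. (1983); the two designs, their operator sequences, and the simplified placement of mental-preparation (M) operators are hypothetical assumptions, not an analysis from this chapter.

```python
# Approximate Keystroke-Level Model operator times in seconds (Card et al. 1983).
# Treat these as assumptions; real values vary with user skill and device.
OPERATOR_TIMES = {
    "K": 0.20,  # press a key or mouse button (average skilled typist)
    "P": 1.10,  # point to a target with a mouse
    "H": 0.40,  # move a hand between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next step
}


def predict_time(operators: str) -> float:
    """Sum operator times for a space-separated KLM sequence such as 'M P K'."""
    return sum(OPERATOR_TIMES[op] for op in operators.split())


# Hypothetical methods for issuing the same command in two designs.
design_menu = "H M P K P K"   # reach for mouse, then open a menu and pick the command
design_shortcut = "M K K"     # recall and type a two-key shortcut

for name, sequence in [("menu", design_menu), ("shortcut", design_shortcut)]:
    print(f"{name}: {predict_time(sequence):.2f} s")
```

Under these assumptions the shortcut design is predicted to be faster by about 2.6 s per use (4.35 s vs. 1.75 s), a difference that matters mainly for frequent tasks. A full analysis would also apply the KLM's rules for placing M operators and include system response time.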

Interviews are the most common informal technique to gather task information (Jeffries 1997; Kirwan and Ainsworth 1992; Meister 1985). In this technique, informants are asked to verbalize their strategies, rationale, and knowledge used to accomplish task goals and subgoals (Ericsson and Simon 1980). As each informant’s mental model of the tasks they verbalize is likely to differ, it is advantageous to interview at least two to three informants to identify the common flow of task activities. Placing the informant in the context of the task domain and having him or her verbalize while conducting tasks affords more complete task descriptions while providing insights on the environment the task is performed within. It can sometimes be difficult for informants to verbalize their task performance because much of it may be automatized (Eberts 1994). When conducting interviews, it is important to use appropriate sampling techniques (i.e., sample at the right time with enough individuals), avoid leading questions, and follow up with appropriate probe questions (Nardi 1997). While the interviewer should generally abstain from interfering with task performance, it is sometimes necessary to probe for more detail when it appears that steps or subgoals are not being communicated. Eberts (1994) suggests that the human information-processing model can be used to structure verbal protocols and determine what information is needed and what is likely being left out.

Observation during task activity or shadowing workers throughout their daily work activities are time-consuming task-analysis techniques, but they can prove useful when it is difficult for informants to verbalize their task knowledge (Jeffries 1997). These techniques can also provide information about the environment in which tasks are performed, such as tacit behaviors, social interactions, and physical demands, which are difficult to capture with other techniques (Kirwan and Ainsworth 1992).

While observation and shadowing can be used to develop task descriptions, surveys are particularly useful task-analysis tools when there is significant variation in the manner in which tasks are performed or when it is important to determine specific task characteristics, such as frequency (Jeffries 1997; Nielsen 1993). Surveys can also be used as a follow-on to further clarify task areas described via an interview. Focused observation, shadowing, retrospectives, and diaries are also useful for clarifying task areas. With retrospectives and diaries, an informant is asked to provide a retrospective soon after completing a task or to document his or her activities after several task events, the latter being a diary.

Whether formal or informal techniques are used, the objective of the task analysis is to identify the goals of users and determine the techniques they use to accomplish these goals. Norman (1988) provides a general model of the stages users go through when accomplishing goals (see Figure 4). Stanton (1998) suggests that there are three main ways in which this process can go awry: by users forgetting a required action, executing an errant action, or misperceiving or misinterpreting the current state of the system. In observing users of a vending machine, Verhoef (1988) indeed found that these types of errors occur during system interaction. In this case study, users of the vending machine failed to perceive information presented by the machine, performed actions in the wrong order, and misinterpreted tasks when they were not clearly explained or when incomplete information was provided.
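When observations like these are coded, Stanton's three categories can serve directly as the coding scheme, and a simple tally shows which kind of breakdown dominates. The observations below are hypothetical vending-machine incidents written for illustration; they are not Verhoef's data.

```python
from collections import Counter
from enum import Enum


class ErrorType(Enum):
    """The three ways goal-directed interaction can go awry (Stanton 1998)."""
    OMISSION = "forgot a required action"
    COMMISSION = "executed an errant action"
    MISPERCEPTION = "misperceived or misinterpreted the system state"


# Hypothetical coded observations of vending-machine users.
observations = [
    ("did not notice the 'exact change only' light", ErrorType.MISPERCEPTION),
    ("pressed a selection before inserting coins", ErrorType.COMMISSION),
    ("walked away without taking the change", ErrorType.OMISSION),
    ("read the sold-out light as 'selected'", ErrorType.MISPERCEPTION),
]

# Count how often each error type was observed, most frequent first.
tally = Counter(error_type for _, error_type in observations)
for error_type, count in tally.most_common():
    print(f"{error_type.name:<13} {count}  ({error_type.value})")
```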

By understanding the stages of goal accomplishment and the related errors that can occur, developers can more effectively design interactive systems. For example, by knowing that users perceive and interpret the system state once an action has been executed, designers can understand why it is essential to provide feedback on the executed action (Nielsen 1993). Knowing that users often specify alternative methods to achieve a goal or change methods during the course of goal seeking, designers can aim to support diverse approaches. Further, recognizing that users commit errors emphasizes the need for undo functionality.
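Feedback and undo can be supported together with a command history: each executed action is recorded along with a way to reverse it, and every execute, undo, or redo reports which action it affected. The sketch below uses one common structure for this (the command pattern), with hypothetical names; it illustrates the idea rather than a design prescribed by the sources cited here.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Command:
    """A user action paired with the operation that reverses it."""
    label: str
    do: Callable[[], None]
    undo: Callable[[], None]


@dataclass
class History:
    done: list[Command] = field(default_factory=list)
    undone: list[Command] = field(default_factory=list)

    def execute(self, cmd: Command) -> str:
        cmd.do()
        self.done.append(cmd)
        self.undone.clear()          # a new action discards the redo path
        return f"Did: {cmd.label}"   # feedback message for the interface

    def undo(self) -> Optional[str]:
        if not self.done:
            return None              # nothing to undo
        cmd = self.done.pop()
        cmd.undo()
        self.undone.append(cmd)
        return f"Undid: {cmd.label}"

    def redo(self) -> Optional[str]:
        if not self.undone:
            return None              # nothing to redo
        cmd = self.undone.pop()
        cmd.do()
        self.done.append(cmd)
        return f"Redid: {cmd.label}"


# Usage: edits to a simple list-based document.
document: list[str] = []
history = History()


def add_word(word: str) -> Command:
    return Command(f"add '{word}'", lambda: document.append(word), lambda: document.pop())


print(history.execute(add_word("hello")))
print(history.execute(add_word("world")))
print(history.undo())
print(document)
```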

The results from a task analysis provide insights into the optimal structuring of task activities and the key attributes of the work environment that will directly affect interactive system design (Mayhew 1999). The analysis enumerates the tasks that users may want to accomplish to achieve stated goals through the preparation of a task list or task inventory (Hackos and Redish 1998; Jeffries 1997). A model of task activities, including how users currently think about, discuss, and perform their work, can then be devised based on the task analysis. To develop task-flow models, it is important to consider the timing, frequency, criticality, difficulty, and responsible individual of each task on the list. In seeking to conduct the analysis at the appropriate level of detail, it may be beneficial initially to limit the task list and associated model to the primary 10–20 tasks that users perform. Once developed, task models can be used to determine the functionality that the system design must support. Further, once the task models and desired functionality are characterized, use scenarios (i.e., concrete task instances, with related contextual [i.e., situational] elements and stated resolutions) can be developed that can be used to drive both the system design and evaluation (Jeffries 1997).
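A task inventory with these attributes can be kept as structured records, which makes it straightforward to narrow the list to the primary tasks. The sketch below ranks tasks by frequency multiplied by criticality, breaking ties by difficulty; that scoring rule, the rating scales, and the example tasks are hypothetical assumptions that a team would replace with its own criteria.

```python
from dataclasses import dataclass


@dataclass
class TaskEntry:
    name: str
    per_week: float         # frequency
    criticality: int        # analyst rating, 1 (low) to 5 (high)
    difficulty: int         # analyst rating, 1 (easy) to 5 (hard)
    typical_minutes: float  # timing
    responsible: str        # who performs the task


def primary_tasks(inventory: list[TaskEntry], limit: int = 15) -> list[TaskEntry]:
    """Return up to `limit` tasks, highest priority first.

    Priority = frequency x criticality (a hypothetical heuristic);
    ties are broken in favor of the more difficult task.
    """
    ranked = sorted(inventory,
                    key=lambda t: (t.per_week * t.criticality, t.difficulty),
                    reverse=True)
    return ranked[:limit]


inventory = [
    TaskEntry("Enter new order", 200, 4, 2, 3.0, "sales clerk"),
    TaskEntry("Look up order status", 300, 2, 1, 1.0, "sales clerk"),
    TaskEntry("Process return", 20, 4, 4, 10.0, "supervisor"),
    TaskEntry("Close fiscal month", 0.25, 5, 5, 240.0, "accountant"),
]

for task in primary_tasks(inventory, limit=2):
    print(task.name, "-", task.responsible)
```

In practice the limit would fall in the 10–20 range suggested above; it is set to 2 here only because the example inventory is short.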

Constructing Models of Work Practices

While results from the task analysis provide task-flow models, they also can provide insights on the manner in which individuals model these process flows (i.e., mental models). Mental models synthesize several steps of a process into an organized unit (Allen 1997). An individual may model several aspects of a given process, such as the capabilities of a tool or machine, expectations of coworkers, or understandings of support processes (Fischer 1991). These models allow individuals to predict how a process will respond to a given input, explain a process event, or diagnose the reasons for a malfunction. Mental models are often incomplete and inaccurate, however, so understandings based on these models can be erroneous.

As developers design systems, they will develop user models of target user groups (Allen 1997). These models should be relevant (i.e., able to make predictions as users would), accurate, adaptable to changes in user behavior, and generalizable. Proficient user modeling can assist developers in designing systems that interact effectively with users. Developers must recognize that users will both come to the system interaction with preconceived mental models of the process being automated and develop models of the automated system interaction. They must thus seek to identify how users represent their existing knowledge about a process and how this knowledge fits together in learning and performance so that they can design systems that engender the development of an accurate mental model of the system interaction (Carroll and Olson 1988). By understanding how users model processes, developers can determine how users currently think and act, how these behaviors can be supported by the interactive system design when advantageous, and how they can be modified and improved upon via system automation.

Task Allocation

In moving toward a system design, once tasks have been analyzed and associated mental models characterized, designers can use this knowledge to address the relationship between the human and the interactive system. Task allocation is a process of assigning the various tasks identified via the task analysis to agents (i.e., users), instruments (e.g., interactive systems) or support resources (e.g., training, manuals, cheat sheets). It defines the extent of user involvement vs. computer automation in system interaction (Kirwan and Ainsworth 1992). In some system-development efforts, formal task allocation will be conducted; in others, it is a less explicit yet inherent part of the design process.

While there are many systematic techniques for conducting task analysis (see Kirwan and Ainsworth 1992), the same is not true of task allocation (Sheridan 1997a). Further, task allocation is complicated by the fact that seldom are tasks or subtasks truly independent, and thus their interdependence must be effectively designed into the human–system interaction. Rather than a deductive assignment of tasks to human or computer, task allocation thus becomes a consideration of the multitude of design alternatives that can support these interdependencies. Sheridan (1997a,b) delineates a number of task allocation considerations that can assist in narrowing the design space (see Table 4).

In allocating tasks, one must also consider what will be assigned to support resources. If the system is not a walk-up-and-use system but one that will require learning, then designers must identify what knowledge is appropriate to allocate to support resources (e.g., training courses, manuals, online help).

Training computer users in their new job requirements and how the technology works has often been a neglected element in office automation. Many times the extent of operator training is limited to reading the manual and learning by trial and error. In some cases, operators may have classes that go over the material in the manual and give hands-on practice with the new equipment for limited periods of time. The problem with these approaches is that there is usually insufficient time for users to develop the skills and confidence to adequately use the new technology. It is thus essential to determine what online resources will be required to support effective system interaction.

Becoming proficient in hardware and software use takes longer than just the training course time. Often several days, weeks, or even months of daily use are needed to become an expert depending on the difficulty of the application and the skill of the individual. Appropriate support resources should be designed into the system to assist in developing this proficiency. Also, it is important to remember that each individual learns at his or her own pace and therefore some differences in proficiency will be seen among individuals. When new technology is introduced, training should tie in skills from the former methods of doing tasks to facilitate the transfer of knowledge. Sometimes new skills clash with those formerly learned, and then more time for training and practice is necessary to achieve good results. If increased performance or labor savings are expected with the new technology, it is prudent not to expect results too quickly. Rather, it is wise to develop the users’ skills completely if the most positive results are to be achieved.

Competitive Analysis and Usability Goal Setting

Once the users and tasks have been characterized, it is sometimes beneficial to conduct a competitive analysis (Nielsen 1993). Identifying the strengths and weaknesses of competitive products or existing systems provides a means to leverage those strengths and resolve the identified weaknesses.

After users have been characterized, a task analysis performed, and, if necessary, a competitive analysis conducted, the next step in interactive system design is usability goal setting (Hackos and Redish 1998; Mayhew 1999; Nielsen 1993; Wixon and Wilson 1993). Usability objectives generally focus on effectiveness (i.e., the extent to which tasks can be achieved), intuitiveness (i.e., how learnable and memorable the system is), and subjective perception (i.e., how comfortable and satisfied users are with the system) (Eberts 1994; Nielsen 1993; Shneiderman 1992; Wixon and Wilson 1997). Setting such objectives will ensure that the usability attributes evaluated are those that are important for meeting task goals; that these attributes are translated into operational measures; that the attributes are generally holistic, relating to overall system/task performance; and that the attributes relate to specific usability objectives.

Because usability is assessed via a multitude of potentially conflicting measures, often equal weights cannot be given to every usability criterion. For example, to gain subjective satisfaction, one might have to sacrifice task efficiency. Developers should specify usability criteria of interest and provide operational goals for each metric. These metrics can be expressed as absolute goals (i.e., in terms of an absolute quantification) or as relative goals (i.e., in comparison to a benchmark system or process). Such metrics provide system developers with concrete goals to meet and a means to measure usability. This information is generally documented in the form of a usability attribute table and usability specification matrix (see Mayhew 1999).
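A usability specification of this kind can be expressed as a small table of goals, each with an operational measure and either an absolute target or a target relative to a benchmark. The sketch below is a hypothetical illustration; the attribute names, measures, targets, and observed values are invented for the example and do not come from Mayhew's (1999) templates.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UsabilityGoal:
    attribute: str                      # e.g., ease of learning
    measure: str                        # operational measure
    target: float                       # absolute value, or fraction of benchmark if relative
    higher_is_better: bool
    benchmark: Optional[float] = None   # benchmark system's value (relative goals only)

    def threshold(self) -> float:
        """Value the observed measure must reach (or stay under)."""
        if self.benchmark is None:
            return self.target                  # absolute goal
        return self.target * self.benchmark     # relative goal

    def met(self, observed: float) -> bool:
        limit = self.threshold()
        return observed >= limit if self.higher_is_better else observed <= limit


spec = [
    # Absolute goal: at least 90% of test tasks completed.
    (UsabilityGoal("effectiveness", "task completion rate", 0.90, True), 0.93),
    # Relative goal: order entry in at most 80% of the benchmark system's 120 s.
    (UsabilityGoal("ease of use", "order entry time (s)", 0.80, False, benchmark=120), 101),
    # Absolute goal: mean satisfaction of at least 5.5 on a 7-point scale.
    (UsabilityGoal("satisfaction", "mean rating (1-7)", 5.5, True), 5.8),
]

for goal, observed in spec:
    status = "met" if goal.met(observed) else "NOT met"
    print(f"{goal.attribute:<14} {goal.measure:<22} target {goal.threshold():g}, "
          f"observed {observed:g}: {status}")
```

Here the relative order-entry goal is not met (101 s against a 96 s threshold), which is the kind of concrete shortfall such a specification is meant to expose.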

User Interface Design

While design ideas evolve throughout the information-gathering stages, formal design of the interactive system commences once relevant information has been obtained. The checklist in Table 5 can be used to determine whether the critical information items that support the design process have been addressed. Readied with information, interactive system design generally begins with an initial definition of the design and evolves into a detailed design, from which iterative cycles of evaluation and improvement transpire (Martel 1998).

Initial Design Definition

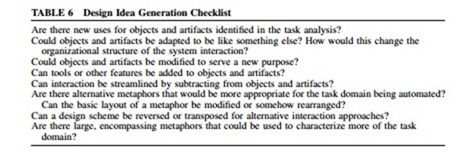

Where should one commence the actual design of a new interactive system? Often designers look to existing products within their own product lines or competitors’ products. This is a sound practice because it maintains consistency with existing successful products. This approach may be limiting, however, leading to evolutionary designs that lack design innovations. Where can designers obtain the ideas to fuel truly innovative designs that uniquely meet the needs of their users? Ethnographic evaluations can lead to many innovative design concepts that would never be realized in isolation from the work environment (Mountford 1990). The effort devoted to the early characterization of users and tasks, particularly when conducted in the context of the work environment, is often rewarded in terms of the generation of innovative design ideas. Mountford (1990) has provided a number of techniques to assist in eliciting design ideas based on the objects, artifacts, and other information gathered during the contextual task analysis (see Table 6).

To generate a multitude of design ideas, it is beneficial to use a parallel design strategy (Nielsen 1993), where more than one designer sets out in isolation to generate design concepts. A low level of effort (e.g., a few hours to a few days) is generally devoted to this idea-generation stage. Storyboarding via paper prototypes is often used at this stage because it is easy to generate and modify and is cost effective (Martel 1998). Storyboards provide a series of pictures representing how an interface may look.

Detailed Design

Once design alternatives have been storyboarded, the best aspects of each design can be identified and integrated into a detailed design concept. The detailed design can be realized through the use of several techniques, including specification of nouns and verbs that represent interface objects and actions, as well as the use of metaphors (Hackos and Redish 1998). The metaphors can be further refined via use scenarios, use sequences, use flow diagrams, use workflows, and use hierarchies. Storyboards and rough interface sketches can support each stage in the evolution of the detailed design.

Objects and Actions. Workplace artifacts, identified via the contextual task analysis, become the objects in the interface design (Hackos and Redish 1998). Nouns in the task flow also become interface objects, while verbs become interface actions. Continuing the use of paper prototyping, the artifacts, nouns, and verbs from the task flows and related models can each be specified on a sticky note and posted to the working storyboard. Desired attributes for each object or action can be delineated on the notes. The objects and actions should be categorized and any redundancies eliminated. The narratives and categories can generate ideas on how interface objects should look, how interface actions should feel, and how these might be structurally organized around specified categories.
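The sticky-note exercise can be mirrored in a few lines of code once task-flow steps have been reduced to verb-noun pairs: nouns become interface objects, verbs become the actions available on them, and an analyst-supplied synonym list merges redundant objects. The steps, synonym list, and function name below are hypothetical illustrations.

```python
from collections import defaultdict

# Hypothetical task-flow steps, already reduced to (verb, noun) pairs by the analyst.
task_steps = [
    ("open", "customer file"),
    ("review", "invoice"),
    ("approve", "invoice"),
    ("open", "client folder"),
    ("file", "invoice"),
]

# Analyst-defined synonyms used to merge redundant objects (an assumption).
SYNONYMS = {"client folder": "customer file"}


def objects_and_actions(steps, synonyms):
    """Map each interface object to the sorted list of actions that apply to it."""
    model = defaultdict(set)
    for verb, noun in steps:
        model[synonyms.get(noun, noun)].add(verb)  # merge synonymous nouns
    return {obj: sorted(verbs) for obj, verbs in model.items()}


for obj, actions in objects_and_actions(task_steps, SYNONYMS).items():
    print(f"{obj}: {', '.join(actions)}")
```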

Metaphors. Designers often try to ease the complexity of system interaction by grounding interface actions and objects and related tasks and goals in a familiar framework known as a metaphor (Neale and Carroll 1997). A metaphor is a conceptual set of familiar terms and associations (Erickson 1990). If designed into a user interface, it can be used to encourage users to relate what they already know about the metaphoric concept to the system interaction, thereby enhancing the learnability of the system (Carroll and Thomas 1982).

The purpose of developing interface metaphors is to provide users with a useful orienting frame- work to guide their system interaction. The metaphor provides insights into the spatial properties of the user interface and the manner in which they are derived and maintained by interaction objects and actions (Carroll and Mack 1985). It stimulates systematic system interaction that may lead to greater understanding of these spatial properties. Through this understanding, users should be able to tie together a configural representation (or mental model) of the system to guide their interactions (Kay 1990).

An effective metaphor will both orient and situate users within the system interaction. It will aid users without drawing attention or energy away from the automated task process (Norman 1990). Providing a metaphor should help direct users’ attention to critical cues and away from irrelevant distractions. The metaphor should also help to differentiate the environment and enhance visual access (Kim and Hirtle 1995). Parunak (1989) accomplished this in a hypertext environment by providing between-path mechanisms (e.g., backtracking capability and guided tours), annotation capabilities that allow users to designate locations that can be accessed directly (e.g., bookmarks), and links and filtering techniques that simplify a given topology.

It is important to note that a metaphor need not rest on literal similarity (Ortony 1979) to be effective. In fact, Gentner (1983) and Gentner and Clement (1988) suggest that people seek relational rather than object-attribute comparisons when comprehending metaphors. According to Gentner’s structure-mapping theory, the aptness of a metaphor should increase with the degree to which its interpretation is relational; thus, when interpreting a metaphor, people should tend to carry relational rather than object-attribute information from the base to the target. The learning efficacy of a metaphor, however, depends on more than the mapping of relational information between base and target (Carroll and Mack 1985). Designers must also consider the open-endedness of metaphors and leverage not only correspondence but also noncorrespondence in generating appropriate mental models during learning. Nevertheless, structure-mapping theory can provide a framework for explaining and designing metaphors that enhance the design of interactive systems.
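The relational-versus-attribute distinction can be made concrete with a toy measure: of the predicates a base and target share, what fraction are relations? This is only a simplified illustration, not Gentner’s structure-mapping engine. It assumes the two domains are already aligned (matching predicates carry identical names and argument labels), treats one-argument predicates as attributes and multi-argument predicates as relations, and uses a hypothetical desk/desktop encoding.

```python
from typing import NamedTuple


class Predicate(NamedTuple):
    name: str
    args: tuple  # one argument = object attribute; two or more = relation


def relationality(base, target):
    """Fraction of shared predicates that are relations, not attributes.

    Assumes base and target are already aligned (matching predicates use
    identical names and argument labels); real structure-mapping searches
    for that alignment itself.
    """
    shared = set(base) & set(target)
    if not shared:
        return 0.0
    relations = sum(1 for p in shared if len(p.args) >= 2)
    return relations / len(shared)


# Hypothetical encoding of a physical office desk (base) and a GUI desktop (target).
office = [
    Predicate("wooden", ("desk",)),
    Predicate("flat", ("desk",)),
    Predicate("contains", ("folder", "document")),
    Predicate("discards", ("wastebasket", "document")),
]
gui = [
    Predicate("flat", ("desk",)),
    Predicate("glowing", ("desk",)),
    Predicate("contains", ("folder", "document")),
    Predicate("discards", ("wastebasket", "document")),
]
print(round(relationality(office, gui), 2))  # 0.67
```

Under the theory’s prediction, a higher score would suggest a more apt metaphor; the unshared predicates ("wooden", "glowing") are the noncorrespondences the text says designers should also exploit.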

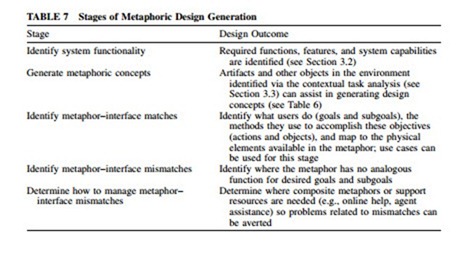

Neale and Carroll (1997) have provided a five-stage process from which design metaphors can be conceived (see Table 7). Through the use of this process, developers can generate coherent, well-structured metaphoric designs.

Use Scenarios, Use Sequences, Use Flow Diagrams, Use Workflows, and Use Hierarchies

Once a metaphoric design has been defined, its validity and applicability to task goals and subgoals can be assessed via use scenarios, use sequences, use flow diagrams, use workflows, and use hierarchies (see Figure 5) (Hackos and Redish 1998). Use scenarios are narrative descriptions of how the goals and subgoals identified via the contextual task analysis will be realized through the interface design. Beyond the main flow of task activities, they should address task exceptions, individual differences, and anticipated user errors. In developing use scenarios, it can be helpful to reference task allocation schemes (see Section 3.3.5). These schemes help define what will be achieved by users via the interface, what will be automated, and what will be rendered to support resources in the use scenarios.

If metaphoric designs are robust, they should withstand the interactions demanded by a variety of use scenarios with only modest modifications. Design concepts for any required modifications should evolve from the types of interactions envisioned by the scenarios. Once running additional use scenarios no longer generates required design modifications, scenario testing can stop.

If parts of a use scenario are difficult for users to achieve or for designers to conceptualize, use sequences can be developed. Use sequences delineate the steps required for the scenario subsection in focus. They specify the actions and decisions required of the user and the interactive system, the objects needed to achieve task goals, and the required outputs of the system interaction. Task workarounds and exceptions can be examined with use sequences to determine whether the design should support these activities. Detailed sequence specifications highlight steps that the design does not adequately support and that therefore require redesign.
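A use sequence can be recorded as structured data so that unsupported steps fall out mechanically. The sketch below is an assumption-laden illustration: the `Step` fields mirror the elements listed above (actor, action or decision, objects, output), "supported" is simplified to mean the design offers the action and all needed objects, and the reorder scenario and function name `unsupported_steps` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    actor: str                                   # "user" or "system"
    action: str                                  # action or decision at this step
    objects: list = field(default_factory=list)  # objects needed for the step
    output: str = ""                             # required output, if any


def unsupported_steps(sequence, design_actions, design_objects):
    """Return indices of steps whose action or objects the design lacks."""
    flagged = []
    for i, step in enumerate(sequence):
        missing = [o for o in step.objects if o not in design_objects]
        if step.action not in design_actions or missing:
            flagged.append(i)
    return flagged


# Hypothetical use sequence for one scenario subsection, including a workaround.
reorder = [
    Step("user", "select", ["item"]),
    Step("system", "look up", ["item", "inventory record"], output="stock level"),
    Step("user", "approve", ["purchase order"]),  # exception handled by a workaround today
]
print(unsupported_steps(reorder, {"select", "look up"}, {"item", "inventory record"}))  # [2]
```

Whether a flagged workaround step should be supported or designed away remains a design decision; the check only surfaces it.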

When there are several use sequences supported by a design, it can be helpful to develop use flow diagrams for a defined subsection of the task activities. These diagrams delineate the alternative paths and related intersections (i.e., decision points) users encounter during system interaction. The representative entities that users encounter throughout the use flow diagram become the required objects and actions for the interface design.
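A use flow diagram is essentially a directed graph, so its alternative paths, decision points, and required entities can be derived directly. The following sketch assumes an acyclic, hypothetical online-ordering flow stored as an adjacency dict; `decision_points`, `all_paths`, and `required_entities` are illustrative names, not part of any established tool.

```python
# Hypothetical use flow for an online ordering subsection: each node is an
# entity the user encounters; each list holds the nodes reachable next.
FLOW = {
    "start": ["search catalog", "browse catalog"],
    "search catalog": ["view item"],
    "browse catalog": ["view item"],
    "view item": ["add to cart", "end"],
    "add to cart": ["checkout"],
    "checkout": ["end"],
    "end": [],
}


def decision_points(flow):
    """Nodes where users must choose between alternative paths."""
    return sorted(node for node, nexts in flow.items() if len(nexts) > 1)


def all_paths(flow, node="start", goal="end", path=None):
    """Enumerate every path from node to goal by depth-first search."""
    path = (path or []) + [node]
    if node == goal:
        return [path]
    paths = []
    for nxt in flow[node]:
        if nxt not in path:  # skip edges that would loop back onto this path
            paths.extend(all_paths(flow, nxt, goal, path))
    return paths


def required_entities(flow, start="start", goal="end"):
    """Entities other than the endpoints become interface objects and actions."""
    return sorted(n for n in flow if n not in (start, goal))


print(decision_points(FLOW))  # ['start', 'view item']
for p in all_paths(FLOW):
    print(" -> ".join(p))
print(required_entities(FLOW))
```

Enumerating paths explicitly makes it easy to check that every alternative route is covered by at least one use sequence.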

When interactive systems are intended to yield savings in the required level of information exchange, use workflows can be used. These flows provide a visualization of the movement of users or information objects throughout the work environment. They can clearly denote if a design concept will improve the information flow. Designers can first develop use workflows for the existing system interaction and then eliminate, combine, resequence, and simplify steps to streamline the flow of information.
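One rough way to check whether a redesigned workflow streamlines information flow is to compare step counts and handoffs between roles before and after. This sketch assumes a workflow can be flattened into an ordered list of hypothetical `(role, step)` pairs, and treats any change of role between consecutive steps as one handoff; the example forms and roles are invented.

```python
def handoffs(workflow):
    """Count transfers of work between roles in a list of (role, step) pairs."""
    return sum(1 for (a, _), (b, _) in zip(workflow, workflow[1:]) if a != b)


def compare(existing, proposed):
    """Summarize step and handoff counts before and after streamlining."""
    return {
        "steps": (len(existing), len(proposed)),
        "handoffs": (handoffs(existing), handoffs(proposed)),
    }


existing = [
    ("clerk", "fill in paper form"),
    ("supervisor", "sign form"),
    ("clerk", "retype form into system"),
    ("accounting", "file copy"),
]
proposed = [
    ("clerk", "enter form online"),    # fill-in and retyping combined
    ("supervisor", "approve online"),  # signing resequenced; filing automated
]
print(compare(existing, proposed))  # {'steps': (4, 2), 'handoffs': (3, 1)}
```

The comments on `proposed` map to the eliminate, combine, resequence, and simplify operations described above.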

Use hierarchies can be used to visualize the allocation of tasks among workers. With a sticky note representing each node in the hierarchy, these representations can demonstrate task allocations before and after the new design is introduced. The benefits of the new task allocation engendered by the interactive system design should be readily perceived in the resulting changes to the hierarchy.
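The before-and-after comparison of a use hierarchy can be automated once each version is written down. This sketch assumes a flat two-level hierarchy stored as a hypothetical `{role: [tasks]}` dict and reports only the tasks whose owner changed; `None` marks a task that does not exist in one of the versions.

```python
def owners(hierarchy):
    """Flatten a {role: [tasks]} hierarchy into {task: role}."""
    return {task: role for role, tasks in hierarchy.items() for task in tasks}


def allocation_changes(before, after):
    """Map each reallocated task to (owner before, owner after); None = absent."""
    b, a = owners(before), owners(after)
    return {
        task: (b.get(task), a.get(task))
        for task in sorted(set(b) | set(a))
        if b.get(task) != a.get(task)
    }


before = {
    "clerk": ["take order", "check stock", "type invoice", "file invoice"],
    "supervisor": ["approve order"],
}
after = {
    "clerk": ["take order"],
    "supervisor": ["approve order"],
    "system": ["check stock", "generate invoice", "file invoice"],
}
for task, (old, new) in allocation_changes(before, after).items():
    print(f"{task}: {old} -> {new}")
```

Deeper hierarchies would need a tree traversal, but the same owner-by-task comparison applies.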

Design Support

Developers can look to standards and guidelines to direct their design efforts. Standards focus on the look of an interface, while guidelines address its usability (Nielsen 1993). Following standards and guidelines can lead to systems that are easy to learn and use owing to a standardized look and feel (Buie 1999). Developers must be careful, however, not to follow these sources of design support blindly. An interactive system can be designed strictly according to standards and guidelines yet fail to physically fit users, support their goals and tasks, and integrate effectively into their environment (Hackos and Redish 1998).

Guidelines aim to provide sets of practical guidance for developers (Brown 1988; Hackos and Redish 1998; Marcus 1997; Mayhew 1992). They evolve from the results of experiments, theory-based predictions of human performance, cognitive psychology and ergonomic design principles, and experience. Several levels of guidelines are available to assist system development efforts, including general guidelines applicable to all interactive systems, category-specific guidelines (e.g., for voice vs. touch-screen interfaces), and product-specific guidelines (Nielsen 1993).

Standards are statements (i.e., requirements or recommendations) about interface objects and actions (Buie 1999). They address the physical, cognitive, and affective nature of computer interaction. They are written in general and flexible terms because they must be applicable to a wide variety of applications and target user groups. They are written by international (e.g., ISO 9241), national (e.g., ANSI, BSI), military and government (e.g., MIL-STD 1472D), and commercial (e.g., Common User Access by IBM) bodies. Standards are the preferred approach in Europe, where the European Community promotes voluntary technical harmonization through the use of standards (Rada and Ketchell 2000).

Buie (1999) has provided recommendations on how to use standards that could also apply to guidelines. These include selecting relevant standards; tailoring the selected standards to a given development effort; referring to and applying the standards as closely as possible in the interactive system design; revising and refining the selected standards to accommodate new information and considerations that arise during development; and inspecting the final design to ensure that it complies with the standards where feasible. Developing with standards and guidelines does not eliminate the need for system evaluation: developers will still need to evaluate their systems to ensure they adequately meet users’ needs and capabilities.
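Two of the recommended steps, tailoring a set of standards to a project and inspecting the final design against them, can be sketched as filtering and checking rules. Everything here is hypothetical: the rule identifiers and wording are invented for illustration and are not quoted from ISO 9241 or any other standard, and a design is reduced to a dict of yes/no properties.

```python
from dataclasses import dataclass
from typing import Callable, List, Set


@dataclass
class Rule:
    rule_id: str                   # hypothetical project identifier, not a real clause
    description: str
    platforms: Set[str]            # platforms the rule is relevant to
    check: Callable[[dict], bool]  # True if a design satisfies the rule


def tailor(rules: List[Rule], platform: str, exclusions=frozenset()) -> List[Rule]:
    """Select rules relevant to this platform, minus project-specific exclusions."""
    return [r for r in rules if platform in r.platforms and r.rule_id not in exclusions]


def find_violations(design: dict, rules: List[Rule]) -> List[str]:
    """Inspect a design against the tailored rules; return failing rule ids."""
    return [r.rule_id for r in rules if not r.check(design)]


RULES = [
    Rule("R1", "Every screen has a visible title", {"desktop", "touch"},
         lambda d: d["all_screens_titled"]),
    Rule("R2", "Destructive actions ask for confirmation", {"desktop", "touch"},
         lambda d: d["confirms_deletes"]),
    Rule("R3", "All functions are reachable from the keyboard", {"desktop"},
         lambda d: d["keyboard_access"]),
]
design = {"all_screens_titled": True, "confirms_deletes": False, "keyboard_access": False}

print(find_violations(design, tailor(RULES, "touch")))    # ['R2']
print(find_violations(design, tailor(RULES, "desktop")))  # ['R2', 'R3']
```

The `exclusions` parameter stands in for the revising-and-refining step; as the text notes, passing such an inspection is no substitute for evaluation with users.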

Storyboards and Rough Interface Sketches

The efforts devoted to selecting a metaphor or composite metaphor and developing use scenarios, use sequences, use flow diagrams, use workflows, and use hierarchies result in a plethora of design ideas. Designers can brainstorm over these design concepts, generating storyboards of potential ideas for the detailed design (Vertelney and Booker 1990). Storyboards should be at the level of detail provided by use scenarios and workflow diagrams (Hackos and Redish 1998). Brainstorming should continue until a satisfactory set of storyboard design ideas has been achieved. The favored set of ideas can then be refined into a design concept via interface sketches. Sketches of screen designs and layouts are generally at the level of detail provided by use sequences. Cardboard mockups and Wizard of Oz techniques (Newell et al. 1990), in which a hidden human operator enacts functionality that is not yet available, can be used at this stage to help characterize designs.
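The core of a Wizard of Oz setup is a dispatcher that answers what the prototype already implements and silently routes everything else to a human operator. This is a minimal sketch under assumptions: `make_wizard_prototype` is a hypothetical helper, the flight-search replies are invented, and the wizard is passed in as a plain function. In a live session that function would relay the input to an operator out of the participant’s view (for example, over a network connection); here a canned stand-in keeps the example non-interactive.

```python
from typing import Callable, Dict, List, Tuple


def make_wizard_prototype(implemented: Dict[str, str], wizard: Callable[[str], str]):
    """Build a prototype that answers implemented commands itself and silently
    forwards all other input to a hidden human operator (the 'wizard').

    Returns the respond function and a log of (input, who answered) pairs.
    """
    log: List[Tuple[str, str]] = []

    def respond(user_input: str) -> str:
        if user_input in implemented:
            reply, source = implemented[user_input], "prototype"
        else:
            reply, source = wizard(user_input), "wizard"  # unseen by the participant
        log.append((user_input, source))
        return reply

    return respond, log


respond, log = make_wizard_prototype(
    {"help": "Say a destination to search for flights."},
    wizard=lambda text: "Three flights found for your request.",
)
respond("help")
respond("find a flight to Oslo tomorrow")
print(log)  # [('help', 'prototype'), ('find a flight to Oslo tomorrow', 'wizard')]
```

The log is useful afterward: inputs the wizard had to handle show which functionality users expected but the prototype did not yet provide.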

Prototyping

Prototypes of favored storyboard designs are then developed. These are working models of the preferred designs (Hackos and Redish 1998; Vertelney and Booker 1990). They are generally developed with easy-to-use toolkits (e.g., Macromedia Director, ToolBook, Smalltalk, or Visual Basic) or simpler tools (e.g., HyperCard scenarios, drawing programs, even paper or plastic mockups) rather than high-level programming languages. The simpler prototyping tools are cost effective and make prototypes easy to generate and modify; however, the resulting prototypes demonstrate little if any functionality, may present concepts that cannot be implemented, and may require a “Wizard” to enact functionality. The toolkits produce prototypes that look and feel more like the final product and demonstrate the feasibility of desired functionality; however, they are more costly and time consuming to generate. Whether high- or low-end techniques are used, prototypes provide cost-effective, concrete design concepts that can be evaluated with target users (usually three to six users per iteration) and readily modified. They prevent developers from expending extensive resources on the formal development of products that users will not adopt. Prototyping should be iterated until usability goals are met.
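The rule of thumb of three to six users per iteration can be reasoned about with a widely used problem-discovery model, in which the expected share of problems found by n users is 1 - (1 - λ)^n, where λ is the probability that a single user reveals a given problem. The sketch below assumes λ = 0.31, a frequently quoted average; actual rates vary by system, task, and user group, so the numbers are illustrative only. The function names are hypothetical.

```python
def share_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n test users,
    assuming each user independently reveals a given problem with
    probability detection_rate (an assumption, not a constant)."""
    return 1 - (1 - detection_rate) ** n_users


def users_needed(target_share: float, detection_rate: float = 0.31) -> int:
    """Smallest number of users whose expected share reaches target_share."""
    if not (0 < detection_rate < 1) or not (0 < target_share < 1):
        raise ValueError("rates and targets must lie strictly between 0 and 1")
    n = 1
    while share_found(n, detection_rate) < target_share:
        n += 1
    return n


for n in range(3, 7):
    print(n, round(share_found(n), 2))  # 3 0.67, 4 0.77, 5 0.84, 6 0.89
print(users_needed(0.85))  # 6
```

Because prototyping is iterated, problems missed by one small group have further chances to surface in later rounds, which is one argument for several small tests over a single large one.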

Usability Evaluation of Human–Computer Interaction

Usability evaluation focuses on gathering information about the usability of an interactive system so that this information can be used to focus redesign efforts via iterative design. While the ideal approach is to consider usability from the inception of the system development process, it is often considered in later development stages. In either case, as long as developers are committed to implementing modifications to rectify the most significant issues identified via usability-evaluation techniques, the efforts devoted to usability are generally advantageous. The benefits of usability evaluation include, but are not limited to, reduced system redesign costs, increased system productivity, enhanced user satisfaction, decreased user training, and decreased technical support (Nielsen 1993; Mayhew 1999).

There are several different usability-evaluation techniques. In some of these techniques, the information may come from users of the system (through the use of surveys, questionnaires, or specific measures from the actual use of the system), while in others, information may come from usability experts (using design walk-throughs and inspection methods). In still others, there may be no observations or user testing involved at all because the technique involves a theory-based (e.g., GOMS modeling) representation of the user (Card et al. 1983). Developers need to be able to select a method that meets their needs or combine or tailor methods to meet their usability objectives and situation. Usability-evaluation techniques have generally been classified as follows (Karat 1997; Preece 1993):

• Analytic / theory based (e.g., cognitive task analysis; GOMS)

• Expert evaluation (e.g., design walk-throughs; heuristic evaluations)

• Observational evaluation (e.g., direct observation; video; verbal protocols)

• Survey evaluation (e.g., questionnaires; structured interviews)

• Psychophysiological measures of subjective perception (e.g., EEGs; heart rate; blood pressure)

• Experimental evaluation (e.g., quantitative data; compare design alternatives)
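As an example of the analytic/theory-based category, the Keystroke-Level Model, the simplest member of the GOMS family (Card et al. 1983), predicts expert execution time by summing standard operator times. The sketch below uses commonly cited operator values; K in particular varies widely with typing skill, so all values should be treated as adjustable assumptions. It also omits the model’s rules for where to place mental operators (M), which are written in by hand here, and the menu-versus-shortcut comparison is hypothetical.

```python
# Commonly cited Keystroke-Level Model operator times in seconds
# (Card et al. 1983). K varies widely with typing skill; the value below
# (average nonsecretary typist) is an assumption to adjust per user group.
OPERATOR_TIMES = {
    "K": 0.28,  # press a key or mouse button
    "P": 1.10,  # point at a target with the mouse
    "H": 0.40,  # home hands between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next step
}


def klm_estimate(operators: str, system_response: float = 0.0) -> float:
    """Predicted execution time for a space-separated string such as 'H M P K'."""
    total = sum(OPERATOR_TIMES[op] for op in operators.split())
    return round(total + system_response, 2)


# Hypothetical comparison: invoking a command on an already selected file.
via_menu = "H M P K M P K"  # reach for mouse, open menu, choose item
via_shortcut = "M K K"      # two-key keyboard shortcut
print(klm_estimate(via_menu))      # 5.86
print(klm_estimate(via_shortcut))  # 1.91
```

Comparisons like this require no users at all, which is both the strength and the limitation of analytic techniques noted above.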

There are advantages and disadvantages to each of these evaluative techniques (see Table 8) (Preece 1993; Karat 1997). Thus, a combination of methods is often used in practice. Typically, one would first perform an expert evaluation (e.g., heuristic evaluation) of a system to identify the most obvious usability problems. Then user testing could be conducted to identify remaining problems that were missed in the first stages of evaluation. In general, a number of factors need to be considered when selecting a usability-evaluation technique or a combination thereof (see Table 9) (Dix et al. 1993; Nielsen 1993; Preece 1993).
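The two-stage combination described above (expert evaluation first, then user testing) can be sketched as pooling the experts’ findings and then isolating what only user testing revealed. This is an illustrative assumption, not a prescribed procedure: each evaluator’s findings are a hypothetical dict of problem to severity rating on an assumed 0 to 4 scale, and problems are matched by identical names.

```python
from statistics import mean


def merge_expert_findings(evaluations):
    """Pool heuristic-evaluation findings from several evaluators.

    evaluations: one dict per evaluator mapping problem -> severity rating
    (assumed 0-4 scale). Returns (problem, mean severity) pairs, most severe first.
    """
    ratings = {}
    for evaluation in evaluations:
        for problem, severity in evaluation.items():
            ratings.setdefault(problem, []).append(severity)
    pooled = [(problem, mean(scores)) for problem, scores in ratings.items()]
    return sorted(pooled, key=lambda item: (-item[1], item[0]))


def missed_by_experts(expert_problems, user_test_problems):
    """Problems that only surfaced in the later user-testing stage."""
    return sorted(set(user_test_problems) - set(expert_problems))


experts = merge_expert_findings([
    {"unlabeled icons": 3, "no undo": 4},
    {"no undo": 3, "low contrast": 2},
])
print(experts)  # [('no undo', 3.5), ('unlabeled icons', 3), ('low contrast', 2)]
print(missed_by_experts([p for p, _ in experts], {"no undo", "jargon in error messages"}))
# ['jargon in error messages']
```

Sorting by pooled severity supports the goal, stated earlier, of rectifying the most significant issues first.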

As technology has evolved, there has been a shift in the technoeconomic paradigm, allowing for more universal access to computer technology (Stephanidis and Salvendy 1998). Thus, individuals with diverse abilities, requirements, and preferences now regularly use interactive products. When designing for universal access, participation of diverse user groups in usability evaluation is essential. Vanderheiden (1997) has suggested a set of principles for universal design that focuses on the following: simple and intuitive use; equitable use; perceptible information; tolerance for error; accommodation of preferences and abilities; low physical effort; and space for approach and use. Following these principles should help ensure effective design of interactive products for all user groups.

While consideration of ergonomic and cognitive factors can generate effective interactive system designs, if the design has not taken into consideration the environment in which the system will be used, it may still fail to be adopted. This will be addressed in the next section.
