LINEAR PROGRAMMING UNDER UNCERTAINTY:ROBUST OPTIMIZATION

ROBUST OPTIMIZATION

As described in the preceding sections, sensitivity analysis provides an important way of dealing with uncertainty about the true values of the parameters in a linear programming model. The main purpose of sensitivity analysis is to identify the sensitive parameters, namely, those parameters that cannot be changed without changing the optimal solution. This is valuable information since these are the parameters that need to be estimated with special care to minimize the risk of obtaining an erroneous optimal solution.

However, this is not the end of the story for dealing with linear programming under uncertainty. The true values of the parameters may not become known until considerably later when the optimal solution (according to the model) is actually implemented. There- fore, even after estimating the sensitive parameters as carefully as possible, significant es- timation errors can occur for these parameters along with even larger estimation errors for the other parameters. This can lead to unfortunate consequences. Perhaps the optimal so- lution (according to the model) will not be optimal after all. In fact, it may not even be feasible.

The seriousness of these unfortunate consequences depends somewhat on whether there is any latitude in the functional constraints in the model. It is useful to make the fol- lowing distinction between these constraints.

A soft constraint is a constraint that actually can be violated a little bit without very serious complications. By contrast, a hard constraint is a constraint that must be satisfied.

Robust optimization is especially designed for dealing with problems with hard constraints.

For very small linear programming problems, it often is not difficult to work around the complications that the optimal solution with respect to the model may no longer be

optimal, and may not even be feasible, when the time comes to implement the solution. If the model contains only soft constraints, it may be OK to use a solution that is not quite feasible (according to the model). Even if some or all of the constraints are hard con- straints, the situation depends upon whether it is possible to make a last-minute adjust- ment in the solution being implemented. (In some cases, the solution to be implemented will be locked into place well in advance.) If this is possible, it may be easy to see how to make a small adjustment in the solution to make it feasible. It may even be easy to see how to adjust the solution a little bit to make it optimal.

However, the situation is quite different when dealing with the larger linear programming problems that are typically encountered in practice. For example, Selected Reference 1 at the end of the chapter describes what happened when dealing with the problems in a library of 94 large linear programming problems (hundreds or thousands of constraints and variables). It was assumed that the parameters could be randomly in er- ror by as much as 0.01 percent. Even with such tiny errors throughout the model, the op- timal solution was found to be infeasible in 13 of these problems and badly so for 6 of the problems. Furthermore, it was not possible to see how the solution could be adjusted to make it feasible. If all the constraints in the model are hard constraints, this is a seri- ous problem. Therefore, considering that the estimation errors for the parameters in many realistic linear programming problems often would be much larger than 0.01 percent— perhaps even 1 percent or more—there clearly is a need for a technique that will find a very good solution that is virtually guaranteed to be feasible.

This is where the technique of robust optimization can play a key role.

The goal of robust optimization is to find a solution for the model that is vir- tually guaranteed to remain feasible and near optimal for all plausible combina- tions of the actual values for the parameters.

This is a daunting goal, but an elaborate theory of robust optimization now has been developed, as presented in Selected References 1 and 3. Much of this theory (including various extensions of linear programming) is beyond the scope of this book, but we will introduce the basic concept by considering the following straightforward case of inde- pendent parameters.

Robust Optimization with Independent Parameters

This case makes four basic assumptions:

1. Each parameter has a range of uncertainty surrounding its estimated value.

2. This parameter can take any value between the minimum and maximum specified by this range of uncertainty.

3. This value is uninfluenced by the values taken on by the other parameters.

4. All the functional constraints are in either or 2:: form.

To guarantee that the solution will remain feasible regardless of the values taken on by these parameters within their ranges of uncertainty, we simply assign the most conserva- tive value to each parameter as follows:

• For each functional constraint in form, use the maximum value of each aij and the minimum value of bi.

• For each functional constraint in 2:: form, do the opposite of the above.

• For an objective function in maximization form, use the minimum value of each cj.

• For an objective function in minimization form, use the maximum value of each cj.

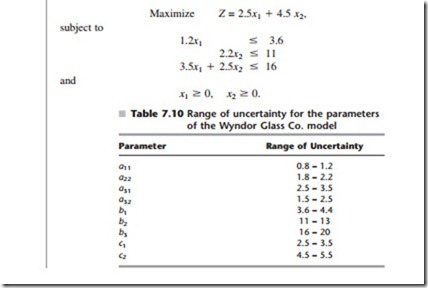

We now will illustrate this approach by returning again to the Wyndor example.

Example

Continuing the prototype example for linear programming first introduced in Sec. 3.1, the management of the Wyndor Glass Company now is negotiating with a wholesale distributor that specializes in the distribution of doors and windows. The goal is to arrange with this distributor to sell all of the special new doors and windows (referred to as Products 1 and 2 in Sec. 3.1) after their production begins in the near future. The distributor is interested but also is concerned that the volume of these doors and windows may be too small to justify this special arrangement. Therefore, the distributor has asked Wyndor to specify the minimum production rates of these products (measured by the number of batches produced per week) that Wyndor will guarantee, where Wyndor would need to pay a penalty if the rates fall below these minimum amounts.

Because these special new doors and windows have never been produced before, Wyndor management realizes that the parameters of their linear programming model formulated in Sec. 3.2 (and based on Table 3.1) are only estimates. For each product, the production time per batch in each plant (the aij) may turn out to be significantly different from the estimates given in Table 3.1. The same is true for the estimates of the profit per batch (the cj). Arrangements currently are being made to reduce the production rates of certain current products in order to free up production time in each plant for the two new products. Therefore, there also is some uncertainty about how much production time will be available in each of the plants (the bi) for the new products.

After further investigation, Wyndor staff now feels confident that they have identified

the minimum and maximum quantities that could be realized for each of the parameters of the model after production begins. For each parameter, the range between this mini- mum and maximum quantity is referred to as its range of uncertainty. Table 7.10 shows the range of uncertainty for the respective parameters.

Applying the procedure for robust optimization with independent parameters outlined in the preceding subsection, we now refer to these ranges of uncertainty to determine the value of each parameter to use in the new linear programming model. In particular, we choose the maximum value of each aij and the minimum value of each bi and cj. The re- sulting model is shown below:

This model can be solved readily, including by the graphical method. Its optimal solution is x1 = 1 and x2 = 5, with Z = 25 (a total profit of $25,000 per week). Therefore, Wyndor management now can give the wholesale distributor a guarantee that Wyndor can provide the distributor with a minimum of one batch of the special new door (Product 1) and five batches of the special new window (Product 2) for sale per week.

Extensions

Although it is straightforward to use robust optimization when the parameters are inde- pendent, it frequently is necessary to extend the robust optimization approach to other cases where the final values of some parameters are influenced by the values taken on by other parameters. Two such cases commonly arise.

One case is where the parameters in each column of the model (the coefficients of a single variable or the right-hand sides) are not independent of each other but are inde- pendent of the other parameters. For example, the profit per batch of each product (the cj) in the Wyndor problem might be influenced by the production time per batch in each plant (the aij) that is realized when production begins. Therefore, a number of scenarios regarding the values of the coefficients of a single variable need to be considered. Simi- larly, by shifting some personnel from one plant to another, it might be possible to in- crease the production time available per week in one plant by decreasing this quantity in another plant. This again could lead to a number of possible scenarios to be considered regarding the different sets of values of the bi. Fortunately, linear programming still can be used to solve the resulting robust optimization model.

The other common case is where the parameters in each row of the model are not in- dependent of each other but are independent of the other parameters. For example, by shifting personnel and equipment in Plant 3 for the Wyndor problem, it might be possi- ble to decrease either a31 or a32 by increasing the other one (and perhaps even change b3 in the process). This would lead to considering a number of scenarios regarding the val- ues of the parameters in that row of the model. Unfortunately, solving the resulting ro- bust optimization model requires using something more complicated than linear programming.

We will not delve further into these or other cases. Selected References 1 and 3 pro- vide details (including even how to apply robust optimization when the original model is something more complicated than a linear programming model).

One drawback of the robust optimization approach is that it can be extremely con- servative in tightening the model far more than is realistically necessary. This is espe- cially true when dealing with large models with hundreds or thousands (perhaps even millions) of parameters. However, Selected Reference 4 provides a good way of largely overcoming this drawback when the uncertain parameters are some of the aij and either all these parameters are independent or the only dependencies are within single columns of aij. The basic idea is to recognize that the random variations from the estimated val- ues of the uncertain aij shouldn't result in every variation going fully in the direction of making it more difficult to achieve feasibility. Some of the variations will be negligible (or even zero), some will go in the direction of making it easier to achieve feasibility, and only some will go very far in the opposite direction. Therefore, it should be safe to assume that only a modest number of the parameters will go strongly in the direction of making it more difficult to achieve feasibility. Doing so will still lead to a feasible so- lution with very high probability. Being able to choose this modest number also provides the flexibility to achieve the desired trade-off between obtaining a very good solution and virtually ensuring that this solution will turn out to be feasible when the solution is implemented.

Comments

Post a Comment